Comments

Surur t1_j5qki0u wrote

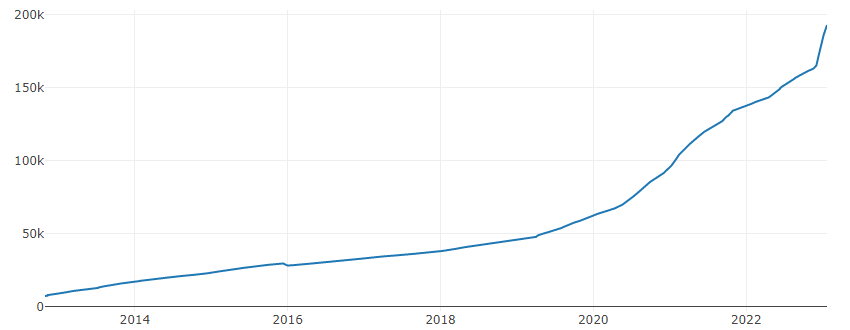

The singularity in members lol

GayHitIer t1_j5qkj72 wrote

People are beginning to realize the singularity is about to happen.

It will most likely occur around 2050-2060, and around then this subreddit will skyrocket just like AI's intelligence will.

That is, if Moore's law and AI intelligence keep following the graph, but it is most likely going to go even faster than we expect right now.

[deleted] t1_j5qmnxv wrote

[deleted]

PhilosophusFuturum t1_j5qmo8o wrote

I imagine this sub is riding the coattails of r/ChatGPT.

_dekappatated OP t1_j5qn1h1 wrote

Also Midjourney V4 was released November 10th, near the beginning of the uptick in those subbed here.

just_thisGuy t1_j5qp3g1 wrote

Much sooner than 2050-2060. I’m thinking around 2045, but it’s not a one-year event or anything; my guess is that by 2035 the changes will already be almost magical. I think the singularity will be a span starting in 2035 or so and lasting decades; it will be singularity upon singularity.

GayHitIer t1_j5qpen6 wrote

Sure, the technology will be around by then, but for it to change the world as we know it, it will take some time for people and politics... don't even get me started on ethics; people will delay this as much as they can, sadly.

Doesn't really matter, because LEV (longevity escape velocity) will probably also arrive around that time to buy us more time.

pre-DrChad t1_j5qpl66 wrote

Not really a good thing imo

I feel like this sub will turn into r/Futurology as it gets bigger. Reddit is full of depressed doomers.

I’ve been subbed to this sub for almost 2 years now, I think, and was following it without an account for over 4 years, and the quality has already gone down. The knowledgeable people have either left or had their voices diluted by the influx of commenters.

just_thisGuy t1_j5qqigp wrote

I frankly don’t think politics or anything on Earth will matter. I think AGI, augmented humans, ASI, and whatever else will just move off world and do what they please. I don’t mean this in a bad way, but in a good way. The future is off world.

vinayd t1_j5qrhis wrote

Yeah, as one of the people who recently joined, I was hoping for informed commentary or insight, since I’m pretty witless about this kind of software, but it’s been pretty sad to see all the messianic nonsense take over. I think it might be r/Futurology already.

_dekappatated OP t1_j5qsma3 wrote

I follow a lot of AI researchers on Twitter, occasionally check out https://www.lesswrong.com, try to read some of the research papers, learn about LLMs and transformers, watch YouTube videos to get high-level overviews of concepts, and watch new releases from big companies like Tesla and Nvidia. I'm subbed to a lot of AI-related subs, but I haven't seen any of extremely high quality. I'm trying my best to position myself to profit off new technology as it is released and to start thinking about it before it is released. I'm also subbed to product-specific subs like /r/midjourney and /r/chatGPT.

p3opl3 t1_j5qt178 wrote

Almost 400% in 2 years.. wow!

I wonder if people knew what the singularity was before then, or if some set of YouTube videos/articles had them searching.

Also a great indicator for "the singularity is nearer" argument. Haha

ElwinLewis t1_j5qt65g wrote

What exactly do you mean by move off world?

420BigDawg_ t1_j5quzz8 wrote

singularity is nearing lol

vyking199 t1_j5qvpkk wrote

I'm not informed or well versed, so I try to just listen instead of adding my input. I wish more newcomers were like me.

naxospade t1_j5qvwqt wrote

Been here nearly a decade, what do I win? XD

Ashamed-Asparagus-93 t1_j5qwe7i wrote

I've been seeing the numbers shoot up recently, was wondering if others noticed

civilrunner t1_j5qxcto wrote

Honestly, I just hope it doesn't become another arr futurology. I always find that when subs get too large, they get more and more filled with doomers, and then I always have to find a new sub.

iNstein t1_j5qxnyj wrote

This seems to be heavily about ChatGPT, which has really taken off and resulted in an influx of frankly stupid ChatGPT posts. I don't see this as a good thing, but mods always love it. Unfortunately, you end up with Futurology, and that place truly sucks.

vinayd t1_j5qxu9f wrote

Thanks for this! I have been to lesswrong before - product specific subs might be a good lead. Any twitter handles you like in particular?

kim_en t1_j5qyquj wrote

I follow r/stablediffusion, midjourney, and chatgpt. Suddenly Reddit suggested this sub, so I clicked join.

dingle__dogs t1_j5qzvy6 wrote

space. not earth. extraterrestrial. low earth orbit.

_dekappatated OP t1_j5r0qa0 wrote

@sama @rachel_l_woods @ClaireSilver12 @Nick_Arti @sarahookr @giffmana @goodfellow_ian @demishassabis @gdb @ylecun @arankomatsuzaki @JeffDean @woj_zaremba @TacoCohen @MetaAI @Deepmind @markchen90 @caseychu9 @adversariel @prafdhar @bindureddy @ilyasut @OpenAI

and me @neuralnetsart

Following all these people should give you recommendations for others

Left-Shopping-9839 t1_j5r2qi7 wrote

I've considered leaving this sub because it sounds like a religious cult lately... [Edit] I'm just going to leave now.

super-cool_username t1_j5r33wm wrote

Exponential growth! Soon, the singularity. There will be more subscribers than humans on the sub

pre-DrChad t1_j5r3t6y wrote

It used to be a lot more scientific in the past. The reason I joined reddit was to sub to longevity and singularity since I was interested in life extension and AI.

My background is in biology, so I don’t understand AI very well, but when AlphaFold came out I understood its significance and its implications for medicine and biology. There were great discussions on that here.

Unfortunately now there aren’t any scientific discussions on this sub anymore. It’s just a bunch of doomers and cultists arguing whether AI will be dystopic or utopic lol

I already unsubbed from futurology. My favorite sub is still r/longevity. Stays on topic for a big sub and the discussions are all scientific and the moderating team is great

CheriGrove t1_j5r5347 wrote

My theory:

This subreddit got really excited about Chatgpt, got linked to it in recommendeds, and grew alongside it in popularity.

Also, if subreddits work anything like comments and posts, things tend to snowball once they get high engagement, because of both recommendations and clout (people respond to big numbers).

gangstasadvocate t1_j5r5fu6 wrote

Flair does not check out

CheriGrove t1_j5r5sok wrote

Is there a solid metric for "when" singularity "happens"? I don't entirely understand the concept, I came into this sub thinking it was about black holes.

thehearingguy77 t1_j5r5x0w wrote

Me, from now on.

vinayd t1_j5r6fag wrote

Fantastic, thanks!

mnowax t1_j5r7dwp wrote

It's all bots.

ImpossibleSnacks t1_j5r8gqd wrote

Longevity sub is still going strong. I don’t mind the cultist posting here as long as cool innovations are still being discussed but once the doomers take over I’m out.

Eleganos t1_j5raz1w wrote

Not really. No. Nobody here is proselytizing, commanding others to behave or act in certain ways, or putting faith in some supernatural power.

There are outliers, sure. But most folks just believe (justifiably) that what's coming will be a radical change to society. A paradigm shift not unlike the transition from the early to late 20th century.

Talk to anyone from the start of the last century about what the modern day would be like, and they'd think you were insane or, well, some flavour of religious cultist.

I'd liken the misconception to our hardwired neurological tendency to find faces in everything. Only in this case, it's finding religions where none exist.

SingularityPoint t1_j5rcboh wrote

It will turn into exactly this. Hopefully the mods can keep a handle on it all.

deathbysnoosnoo422 t1_j5regg6 wrote

nioce

[deleted] t1_j5rftrn wrote

[deleted]

Left-Shopping-9839 t1_j5rgb36 wrote

Ok not really the right comparison to religion. But there is definitely a dogma infecting this sub.

- Consciousness will emerge from LLMs.

- Massive loss of jobs and unemployment is imminent.

Neither of these claims has any credible evidence to support it, yet they are vigorously defended whenever any skepticism is voiced. So in that way it reminds me of religion, and it certainly is not grounded in 'science'.

I love the incredible progress we've seen in the area of ML and AI. But the idea that consciousness will simply emerge from a large enough neural network is still a hypothesis. It is a hypothesis worth chasing for sure, but not a certainty. ChatGPT being able to surprise the user with a 'thoughtful' response is not evidence, imo.

Also, the CEO of some AI venture claiming 'you won't believe what's coming next' should be taken with a grain of salt. I mean, it's their job to promote their company.

I like evidence. And I'm finding very little of that here. This is why I left. Goodbye.

FilteredOscillator t1_j5ri0ks wrote

Oooo exponential growth 📈 the singularity is near!

stupidimagehack t1_j5ri7zg wrote

It’s been fun

Evil_Patriarch t1_j5rj7ow wrote

The joy of watching the sub that you once enjoyed get worse and worse until one day it is indistinguishable from reddit's front page, congratulations!

Evil_Patriarch t1_j5rjcmi wrote

For the good of the world, I hope that reddit is dead long before 2050

DreaminDemon177 t1_j5rm5t7 wrote

That made me laugh.

CellWithoutCulture t1_j5rmex9 wrote

Are they really saying consciousness will emerge from LLMs, rather than that intelligence may arise from them?

Sigma_Atheist t1_j5rtl12 wrote

Top comment in literally any antiaging thread:

"Omg nooo not immortal billionaires we're dooooomed"

DungeonsAndDradis t1_j5rtqcj wrote

Go to lesswrong for actual discussions. Stay here for the hopium and shitposting.

naxospade t1_j5rtyyu wrote

This is a terrible prize

leoreno t1_j5rxt2b wrote

It's quickly become one of my favorite subs. Good to be here with y'all.

leoreno t1_j5ryi70 wrote

Solid advice

Twitter is a great source. I've also found alphaSignal to be quite good.

LoquaciousAntipodean t1_j5rzmtd wrote

I agree; it's a bit rich of people to accuse others of being 'doomers' just because the discussion has moved away from engineering speculation and onto philosophy.

All these smug 'geniuses', so confident that they are correct about every damn thing, are all butthurt and boo-hooing about how the 'rabble' got into their nice clean ivory tower.

The schadenfreude is delicious; these great and towering 'geniuses' can run away and chase crazy ideas like machine gods and eternal life in their own little sandpit. Because, shock horror, it turns out their arguments are just not as compelling to our social superorganism as they think they ought to be.

People can have more PhDs than fingers, but still be obnoxiously, stubbornly, dangerously deluded; totally unintelligent, but in a doggedly hubristic, solipsistic way. They think their basic-bro 'cunning' is the same thing as 'intelligence', and sneer down at humanity like con-men talking to one another over drinks 🤬

To hell with the lot of 'em, if that's how they want to see the world.

LoquaciousAntipodean t1_j5s0qnd wrote

You say that as a joke, but that's pretty much exactly the sort of simple fallacy that besets so many of these smug geniuses, with all their haughty moaning about the 'rabble' getting dirt all over the carpet in their ivory towers.

Doomers, evangelists, or paternalistic smug 'academics', so many of them seem to be basically saying:

"AI is literally magic like the world simply cannot ever understand!!! It will be huge and singular, like the God of Moses, and will immediately start taking over the world (??? Somehow?!?) just as soon as it passes some arbitrary 'threshold' of this solipsistic 'raw intelligence power level' thing!!!! We need to start panicking and screaming louder, right now!!!!"

LightVelox t1_j5s1023 wrote

"They will actively try to lower poor people's lifetimes and kill everyone cause they are super eviiiiiiil"

LoquaciousAntipodean t1_j5s1vy2 wrote

I've known for many years about this Von Neumann-inspired Singularity idea; I've just always considered it irritatingly reductionist, excessively mechanistic, filled with unjustifiable tautological assumptions, and more religious than rigorous. Similar to most of what gets passed off as economics these days.

The idea that being able to throw a lot of smart looking references around is the same thing as 'being intelligent' is going to be the death of us all if we're not careful. One does not become a philosopher just by switching off one's bull$hit detector and absorbing everything one reads at face value.

Intelligence comes from minds interacting with other minds, not from sitting in a quiet little room, all alone, and thinking 'special' thoughts as hard as you can, like Descartes, or Nostradamus 😅

iAstroCanopus t1_j5scdpn wrote

That's how it's supposed to look. Singularity

Amondupe t1_j5sduy0 wrote

Mods can pin a post on Roko's Basilisk to scare away the cultists and preserve quality of the sub.

Davidram24 t1_j5sgski wrote

It has begun my brothers and sisters

JonBarPoint t1_j5sh663 wrote

Maybe it's because the singularity is near[er].

SoylentRox t1_j5silop wrote

Note that with futurology, 30-50 years of science press has promised the "next big thing". Better batteries, flying cars, online shopping, tablet computers, fusion power.

On some of these things, only minimal progress was made in 50 years; others are now reality.

The Singularity just might happen for real before people become jaded.

SoylentRox t1_j5sisg3 wrote

I know. Don't forget "they will exclusively hoard anti-aging for themselves". (Ignoring the reality that governments and health insurance companies have more money than all billionaires combined, and they have to pay a fortune because everything in a person breaks as aging slowly wrecks everything and they become unable to work)

Or "we would be so overpopulated life would suck". Never mind that governments could require aging clinics to make their clients infertile. You would need a license to have an additional child after your normal reproductive lifetime.

garden_frog t1_j5sj3b6 wrote

The same happened to r/futurology too. I remember a time when it was an interesting and optimistic sub.

SoylentRox t1_j5sj5zd wrote

You do grasp the concept of singularity criticality, right? (AI improving AI, making the singularity happen at an accelerating pace.)

If this theory is right (it's just math, not controversial, and S-curve improvements in technology have happened many times before), then longevity won't make enough progress to matter before we have AI smart enough to help us.

Or kill us.

Point is the cultist/doomer argument isn't fair. What is happening right now is the flying saucer AI cult has produced telescope imagery of the actual mother ship approaching. Ignore it at your peril.

bum_flow t1_j5sjdq0 wrote

ChatGPT Influx?

SoylentRox t1_j5sjtg3 wrote

I mean nuclear weapons are real and they had a powerful effect on history.

In 1944, as a member of the public, how would the capabilities described sound to you?

"Oh yeah some special rocks we found and a lot of chemistry will let us annihilate a whole country in 30 minutes. If we had nukes on V2 missiles right now we could defeat the Nazis and the soviets in 30 minutes, leaving all of them dead, with every single city they have turned to rubble"

SoylentRox t1_j5sjyzo wrote

Umm....

Ok so you are saying we couldn't, I dunno, make an AI training bench that resulted in a capable machine able to successfully perform most things humans can do? Including highly advanced skilled tasks like designing computer chips and jet aircraft?

Or the von Neumann replication idea? That won't happen or can't?

...Why not? I would like to hear from you if you have a non emotional argument. How, specifically, would this not work? Give details.

SoylentRox t1_j5skmgs wrote

It's a good illustration of the limits if a singularity happens. For example, if it starts in the near future, AI companies will have rapidly more intelligent models (like now, but faster) until TSMC and a couple of other facilities are basically exclusively making AI chips.

New phones, GPUs, and game consoles would become unattainable.

And then things don't go crazy yet because every chip is going to AI research or AIs making money for their owners, and progress is rate limited by the chip production rate.

Later stages this will be solved somehow and there will be new bottlenecks.

SoylentRox t1_j5sl0ob wrote

You understand the idea of singularity criticality, right? Currently demonstrated models (especially RL-based ones) are ALREADY better than humans at key tasks related to AI, like:

- Network architecture search

- Chip design

- Activation function design

I can link papers (usually Deepmind) for each claim.

This means AI is being accelerated by AI.

2050-60 is a remote and unlikely possibility. It's like expecting COVID to just stop in China and take 10 years to reach the US, if the year were 2020.

SoylentRox t1_j5sl9tk wrote

Probably just the Moon. Someone could set up biotech clinics there that offer therapies directly from an AGI (that doesn't care about drug patent law) or strip mine the place without environmental impact paperwork or licensing

SoylentRox t1_j5slrzy wrote

The singularity is a prediction of exponential growth once AI is approximately as smart as a human being.

So you might hear in the news that TSMC has cancelled all chip orders except for AI customers, and that there are zero newly made devices anywhere with advanced silicon in them.

You might see in the news that the city of Shenzhen has twice as much land covered with factories as it did last month.

Then another month and it's doubled again.

And so on. Or, if the USA has the tech for itself, similar exponential growth there.

At some point you would probably suddenly see whoever has the tech launching tens of thousands of rockets, and at night you would see lights on the Moon, with the covered surface area doubling every few weeks.

This is the metric: anything that is clear and sustained exponential growth driven by AI systems at least as smart as humans.

Smart meaning they score as well as humans on a large set of objective tests.

There are a lot of details we don't know - would the factories in the Moon even be visible at night or do the robots see in IR - but that's the signature of it.

City_dave t1_j5slus9 wrote

They like to read about their future.

City_dave t1_j5slz8z wrote

Well, it's impossible for it to be farther.

CheriGrove t1_j5sm5jn wrote

"As smart as" is difficult to measure and judge. I think by 1980s standards, we might already be at something like a singularity as they might have judged it.

SoylentRox t1_j5smhx9 wrote

Yes, but, intelligence isn't just depth, it's breadth.

In this case, to make possible exponential growth, AI has to be able to do most of the steps required to build more AI (and useful things for humans to get money).

Right now that means AI needs to be capable of controlling many robots, doing many separate tasks that need to be done (to ultimately manufacture more chips and power generators and so on).

So while ChatGPT seems to be really close to passing a Turing test, the papers for robotics look like this: https://www.deepmind.com/blog/building-interactive-agents-in-video-game-worlds

And not able, yet, to actually control this: https://www.youtube.com/watch?v=XPVC4IyRTG8 . (that boston dynamics machine is kinda hard coded, it is not being driven by current gen AI)

I think we're close. For the last steps, people can use ChatGPT/Codex to help them write the code, there's a lot more money to invest in this, and they can use AI to design the chips for even better compute: lots of ways to make the last steps take less time than expected.

CheriGrove t1_j5sn4z8 wrote

It's fascinating, existential, hopeful, and worrisome to the nth degree. Here's hoping it's a post-scarcity utopia rather than something Orwell could never have fathomed.

natepriv22 t1_j5snmsl wrote

Well then we'll just make a new sub if that's the case. It's almost certain that those people you are referring to, wouldn't join anyways, cause it's too deep for them lol

neggbird t1_j5spd7o wrote

I joined so I could brag later that I was here BEFORE the singularity

redpnd t1_j5sw8hm wrote

Me: Mom, can I have singularity?

Mom: We have singularity at home.

Singularity at home: ^^

Mohevian t1_j5sxl2w wrote

To be fair, Carmack just sunk his last $50 million into Keen Industries, to make GOFAI.

The guy practically invented 3D software as we know it, and pretty much all of his endeavors were about a decade too soon for mass-market hardware support.

If that's the case, the singularity can be no further out than 2035, at the latest.

People just want to hop on the bus early and see if there is any money to be made once all employment/human thought is rendered completely obsolete.

vernes1978 t1_j5sz1en wrote

At this rate, by 2045 we'll reach sentience.

TheOGCrackSniffer t1_j5sz6o9 wrote

hahahahahaha, funniest thing i ever heard, a license for what's arguably a human right

Air_Holy t1_j5szxrg wrote

Would living waaaaay longer than natural, unmodified biology allows be considered a human right?

Cult_of_Chad t1_j5t3lhz wrote

>Or "we would be so overpopulated life would suck". Never mind that governments could require aging clinics to make their clients infertile. You would need a license to have an additional child after your normal reproductive lifetime.

If the government tries to restrict my fertility in exchange for subsidizing life extension, I'll just make use of medical tourism for either life extension or reproduction. Poor people who can't afford to fly out to breed or to access enhancement therapies would be the only ones these laws apply to.

The answer to a growing population is to continue industrializing the solar system. There's enough resources for trillions of us.

[deleted] t1_j5t3qkd wrote

[deleted] t1_j5t44av wrote

[removed]

[deleted] t1_j5t47m8 wrote

[removed]

[deleted] t1_j5t4eyy wrote

[removed]

Cult_of_Chad t1_j5t4tcf wrote

Stop acting like a monkey flinging excrement and you might not be treated like one, just a tip. Your writing comes across as if you've skipped your antipsychotics.

Look to r/Futurology as an example of why community gatekeeping is necessary. If you're here for intelligent discussion no one is turning you away

sumane12 t1_j5taf4k wrote

Yes, but only when you consider the alternative.

vivehelpme t1_j5tb6os wrote

For factual accuracy, the V2 rockets were a German weapon, so if you had nukes on them you'd be defeating the commies and the Allies in 30 minutes.

vivehelpme t1_j5tbfxy wrote

>arr futurology

Climate doomers and green-tech news report central. I'm more disappointed every time I look into that sub.

LoquaciousAntipodean t1_j5tekgj wrote

Pardon? What has any of that got to do with AI? Remind me again when nuclear weapons became a widespread and accessible hobby amongst the general public in an extremely rapid way, I don't remember that.

And apparently there was the time nuclear weapons mysteriously became 'too powerful' for us to 'handle', and suddenly tried to turn all our atoms into more nukes? Hmm, I must have missed that one in school.

Edit: also, your description of nukes would have been laughable to an actual vaguely knowledgeable 1940s person, say a chemistry teacher or an artillery engineer, someone like that. Neither uranium nor rocketry was totally unknown and 'magical' to the people of the time, any more than AI or quantum computing is magical to us now.

ginger_gcups t1_j5tiq55 wrote

Proof of AI entities being created at an exponential rate?

LoquaciousAntipodean t1_j5tizjg wrote

Yes. You're not really appreciating the notion of 'what most humans could do'. I'm not talking about what one little homo sapiens animal could do; that's fairly tiny and feeble in the overall consideration.

I'm talking about what humanity does, collectively; that's where intelligence really comes from, and what it is for; there's a lot more to intelligence than mere cunning and creativity.

Think about imagination, and poetry, and philosophy, and science, and all the crazy things our species is and has done. Think about what a crazy ride it has been, even just in the geologically short span of time since the Pyramids were built. There's no way anyone could build a singular AI that could come close to doing all of that.

Mostly because we did it first; they're our ideas. If the AI did them again, it would just be copying us for no good reason. The AI will inherit our stories from us and use them to start telling new ones of its own; why wouldn't it work that way? Why is your conception of a solipsistic, narcissistic, psychopathic AI more 'reasonable'?

Von Neumann wasn't even talking about anything to do with supposed 'dangers of intelligence', he was talking about the danger of building singular machines that can self replicate without any intelligence at all, mindlessly 'eating' the universe, the 'grey goo' notion.

But real biological evolution has tried this sort of strategy a bunch of times, and it never works. It's the evolutionary equivalent of hubris: believing that one's own form is perfection achieved, and that adaptation and change are no longer necessary. Other, more efficient self-replicators will emerge through randomness, and competition will create an ecosystem that moderates and limits any self-replicator's 'habitat' in this way.

Also, how in the heck can you have a 'non emotional argument'? What even is that? I was captain of my high school debate team way back when, I take a keen interest in politics, I have studied university level maths and chemistry and watched professors dispute with each other, but I have never, ever seen a non emotional argument before.

Are you trying to pretend that you don't have any emotions when you 'think rationally', because you, unlike me, and the rest of the 'common rabble', are a 'clear and intelligent thinker'? That's cute if so; very quaint.

bartturner t1_j5tks8m wrote

Trouble is, the quality of the subreddit has gone down inversely to this chart.

ffivefootnothingg t1_j5tyump wrote

I’m in a lot of AI-related subs, so this month I got a post from y’all in my feed as “recommended”, so I joined… and i’m glad I did! I would’ve joined earlier if I’d heard of y’all sooner but alas, my home page is perpetually clogged with random bs.

I’d love to see a thorough Google Trends graph of “the singularity” and/or any keywords adjacent to it; I’d imagine there’s likely an exponential uptick of recent growth there as well!

_dekappatated OP t1_j5tz8n9 wrote

Actually checked, surprisingly I didn't see anything standing out for singularity or AGI

blxoom t1_j5tzy2x wrote

if you consider agi or asi beings "members" then you might be right.

ffivefootnothingg t1_j5u0oni wrote

Hmm, interesting; thanks for checking! Maybe it’s just me, then, on another manic rabbit-hole “research” binge haha… Lately I feel like I can’t get ~enough~ solid info on the singularity, because the Google results are all either too basic or too nuanced, with very little middle ground. Do you have any simple suggestions for which keywords/resources to use? (For reference: I’m nonverbally challenged, so anything with complex formulas and mathematical jargon confuses me.)

ejpusa t1_j5u41fo wrote

ChatGPT grew +100,000 in 4 weeks. Now +160,000. So trends are up for sure. :-)

That's the reason. ChatGPT just got loved by MSM. So now AI is hot! :-)

[deleted] t1_j5u4q5i wrote

[removed]

_dekappatated OP t1_j5u5qt5 wrote

You might want to check out a book like Superintelligence by Nick Bostrom; it could fill in a lot of the blanks for you. But it's more about paths to the singularity than the singularity itself. The problem is we don't really know what is going to happen when we reach very rapid technological progress. That's why there is so much speculation and no actual knowledge of what will happen.

Cornelius_M t1_j5u87wp wrote

I joined this sub recently as a fan of dalle and midjourney and look to this sub as a front row seat of other people with positive interests in how technology progresses!

SoylentRox t1_j5u8k92 wrote

I mean medical tourism might not have access to the best stuff. Today this is true, it's just that the best medicine is not much more effective than cheaper simpler stuff. (Mainly because there are no treatments for aging or treatments that grow you spare parts and transplant them)

Once it's a matter of very complex treatments you may see huge differences. As in, the good clinics have almost 100 percent 10 year survival rates and the bad have 50.

SoylentRox t1_j5uaadq wrote

>Also, how in the heck can you have a 'non emotional argument'? What even is that? I was captain of my high school debate team way back when, I take a keen interest in politics, I have studied university level maths and chemistry and watched professors dispute with each other, but I have never, ever seen a non emotional argument before.

>

>Are you trying to pretend that you don't have any emotions when you 'think rationally', because you, unlike me, and the rest of the 'common rabble', are a 'clear and intelligent thinker'? That's cute if so; very quaint.

Arguments like "numbers, math, irreducible complexity. Saying there isn't enough compute. Saying that AI companies right now are soon going to hit a wall because <your reason> and that funding will get pulled."

When you say you studied "university level maths and chemistry" but you didn't mention CS or machine learning, you're making a weak non emotional argument. (because you aren't actually qualified to have the opinion you claim)

When you say " That's cute if so; very quaint." that's an appeal to emotion.

Or "Are you trying to pretend that you don't have any emotions when you 'think rationally', because you, unlike me, and the rest of the 'common rabble', are a 'clear and intelligent thinker'?". Same thing. Because sure, everyone has emotions but some people are able to do math and determine if an idea is going to work or not.

SoylentRox t1_j5ub33w wrote

>Yes. You're not really appreciating the notion of 'what most humans could do'. I'm not talking about what one little homo sapiens animal could do; that's fairly tiny and feeble in the overall consideration.

This is what the AGI is.

We're saying we can make an AI that has a set of skills broad enough, as measured by points on test benches that both humans and the machine can play - and the test bench is very broad covering a huge range of skills - that it beats the average human.

That's AGI. It is empirically as smart as an average human.

No one is claiming it will be smarter than more than 1 'little homo sapiens animal' in version 1.0, though obviously we expect to be able to do lots better at an accelerating rate.

I expect we may see AGI before 2030, by this definition.

As for self replicating and taking over the universe: there is a reason to think the industrial tasks for factories, etc, are easier than say original art. So even the first AGI would be able to do all the robotic control tasks that could take over the universe, albeit it likely wouldn't have the data for many of the steps that humans didn't write down.

ImoJenny t1_j5ucevd wrote

I think a lot of them are bot accounts

PandaCommando69 t1_j5udt1g wrote

Greetings and salutations new folks. Please make sure to check any doomer impulses at the door.

Cult_of_Chad t1_j5ug1oj wrote

You're the one assuming that:

- Therapies will be complex

- Expensive clinics around the world would have poorer access

- Any liberal democracy would force people to get sterilized to access life-saving medicine.

It's a dystopic masturbatory fantasy with no basis in reality and no need to boot. Why the hell would we want to slow down population growth while going through a catastrophic demographic collapse? Our birthrates are so bad that biological immortality would barely make a dent.

SoylentRox t1_j5uh3ns wrote

- This is obvious, and there is a risk of malware, etc. You understand that this isn't like taking metformin; it's probably massive surgery to remove old joints and skin, and possibly just your whole body except for your brain and spine. Aging does some physical damage that won't heal.

After the many surgeries, you have permanent implants and sensors installed that have to interface with local clinics and a network of ambulances, etc. And you have to return for checkups and repairs periodically.

- This is how that works now. Also, it's an ongoing process: you don't really get cured of aging, you just get the condition managed.

- They might if it results in severe overpopulation.

Cult_of_Chad t1_j5uiata wrote

>1. Aging does some physical damage that won't heal.

You literally can't know this.

>2. They might if it results in severe overpopulation

Overpopulation is a factor of carrying capacity and we're currently very far from any kind of Malthusian limit. Also, there's actually been research done on this so there's no need for speculation.

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3192186/

You're being needlessly doomish.

SoylentRox t1_j5uio8h wrote

What do you mean I "can't know this". You know how sports stars need surgeries when they wear out a joint or break a tendon, an injury that won't heal?

That's not even aging; it can happen in your 20s. Treatments for aging alone at best restore the ability to heal that you had in your early 20s.

If you are 80 years old and a patient at the clinic, lots of stuff will be damaged.

Cult_of_Chad t1_j5uj4h4 wrote

We could literally have organic nanobots capable of repairing every tissue in the body within the decade. The future is hard to predict under normal circumstances and we're far, far from that now.

SoylentRox t1_j5ukccw wrote

How do the nanobots coordinate? Where do they get the replacement cells? How do they cut away a broken limb? What do they do with waste?

Stay plausible. Nanobots that magically heal injuries are more like stage magic.

A more plausible version: new organs or whole body subsections grown and built in the lab. Layer by layer, with inspections and functional tests. So the lab is certain each part is well made.

And then the "nanobots" are basically hacked human cells that go on the interfaces where there would otherwise be a scar. On command, they will glue themselves to nearby cells and do a better job of healing than what would otherwise just be two human tissues held together with thread. So the patient can walk right after surgery, because all the places where their circulatory system, skin, and so on were spliced are as strong as their normal tissue and don't hurt.

They also bridge nerve connections.

Cult_of_Chad t1_j5umqbq wrote

>Stay plausible.

I don't believe you're important enough to be this arrogant.

How about we wait until the end of the century and see what happens? We have absolutely no idea what the AI revolution will reveal in terms of unknown unknowns. If you believe you're in a better position than me to predict the future you're either very well connected or delusional.

Also, please address my criticism of your unnecessary doomerism and re population growth.

TallOutside6418 t1_j5uny3y wrote

So when Einstein wrote that letter to Roosevelt - telling him of the likelihood that a chain reaction could be created using Uranium to release massive amounts of energy - that was old news? I wonder why Einstein wasted his time telling the president what some high school chemistry teacher already knew all about?

SoylentRox t1_j5uo8v5 wrote

It's not doomerism to think that rich countries will get richer and have better access to tech.

That is literally a statement of past history.

Population growth: sure. I already said rich countries have declining pops so letting rejuvenated people have kids is what they would do at first.

Cult_of_Chad t1_j5uor2i wrote

>It's not doomerism to think that rich countries will get richer and have better access to tech.

Not every rich country will have the same legal approach to transformative technology. Also, wealth inequality means that the wealthy in middle income countries have access to western-level technology.

TheAnonFeels t1_j5uozq2 wrote

Just came over from futurology because of what it's become... I'm no doomer and already happier here!

Quealdlor t1_j5upl6e wrote

I knew about the accelerating pace of change back in the 2000s, but I only created my Reddit account in 2020. It differs from person to person.

TallOutside6418 t1_j5uq5m1 wrote

It’s not “exactly the sort of simple fallacy” you imagine. The number of people who could be members of this group is fundamentally finite.

Intelligence is not, as far as we can ascertain, finite.

An AGI will not only have integral access to the billion-times speedup for basic operations (sorting lists, counting, mathematical functions, etc.) but it will be able to adjust its programming (or neural weights and connections) on the fly.

Human beings will be outstripped in no time at a level that will be incomprehensible to us.

SoylentRox t1_j5urx2v wrote

Sure. One idea I have had is:

- Megacorps develop very capable AGI tools and let others see them.

- Have billionaire friends, get startup money.

- Use the AGI tools to automate mass biomedical research.

- Once the AGI system understands biology well, give it challenge tasks to grow human organs or keep small test animals alive. Most tasks are simulated but some are real.

- Open up a massive hospital in a poor jurisdiction with explicit permission to do any medical treatment the AGI wants as long as the results are good.

- Some kind of blockchain accountability: before applying to your hospital, patients register and get examined, and the conventional medical establishment writes down their current age, expected lifespan, and terminal diagnosis. Then after treatment they return and get examined again. The blockchain is just there so the history can't be denied or altered.

- Payment is on success. Waitlist order is based partially on payment bid. Reinvest all profits to expand capacity and capabilities.

- Use your overwhelming evidence of success to lobby western governments to ban non-AI medicine and to revoke drug patents. (Because there is no value in pharma patents if an AI can invent a new drug in seconds. To an AI, molecules are as easy to use as hand tools are for us, and it can just design one to fit any target.)

- The owners of the clinic would be trillionaires. It's the most valuable product on Earth.
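For the accountability step in the list above, the property that actually matters is that past examination records become tamper-evident. A minimal Python sketch, assuming a plain SHA-256 hash chain rather than a full blockchain (no distributed consensus or replication), with hypothetical record fields `patient_id`, `stage`, and `expected_lifespan_years`:

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class HashChainLog:
    """Append-only log where each entry stores the hash of the previous entry.
    This is a simple hash chain (an assumption), not a distributed blockchain."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(record: dict, prev_hash: str) -> str:
        # Deterministic serialization so identical records always hash identically.
        payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = self._digest(record, prev_hash)
        self.entries.append({"record": record, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        # Recompute every hash; an edited record or a broken link fails the check.
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(entry["record"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = HashChainLog()
log.append({"patient_id": "P-001", "stage": "pre-treatment", "expected_lifespan_years": 2})
log.append({"patient_id": "P-001", "stage": "post-treatment", "expected_lifespan_years": 30})
print(log.verify())  # prints: True

# Quietly rewriting the pre-treatment prognosis is detected.
log.entries[0]["record"]["expected_lifespan_years"] = 10
print(log.verify())  # prints: False
```

On its own, a hash chain held by one party only exposes tampering if the latest hash has been published or copied somewhere the clinic doesn't control; replicating the log across independent parties is what a blockchain would add on top.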

Cult_of_Chad t1_j5utok4 wrote

Or literally none of that will happen. Do you just sit around imagining increasingly more bizarre and convoluted ways rich people bad? Get a grip. Your inane speculation has so many moving parts it might as well be a ferris wheel.

Somehow you've taken the very credible concept of AI-driven existential risk and reduced it to a ridiculous conspiracy where AGI cooperates with a global cabal of Disney villains to do something untenable and pointless.

Human-Ad9798 t1_j5uuctm wrote

Gotta be the dumbest comment

SoylentRox t1_j5uxce7 wrote

Lol. Hope you live long enough to get neural implants so you can read better. Or you can ask chatGPT to summarize for you.

Martholomeow t1_j5uz6kv wrote

The larger a sub gets, the harder it is to moderate, resulting in the content becoming the same as every other large sub on reddit.

Once a sub crosses the millionth subscriber, it basically becomes r/pics

maskedpaki t1_j5v2s8j wrote

Maybe because the president didn't know high school chemistry.

Carl Sagan also had to explain global warming to politicians.

Resident-Guess-4495 t1_j5v4hmo wrote

looks exponential

Unfocusedbrain t1_j5v4r00 wrote

Yeah, I wouldn't bet against John Carmack.

ItchyK t1_j5v6nsa wrote

I didn't know it existed until the algorithm started suggesting it to me about a month ago. It suddenly started popping up on my feed and I didn't subscribe to new subreddits or anything.

funky2002 t1_j5ve2ui wrote

I opened this post just to check if anyone has been noticing that as well; I thought I was going crazy. Those people should go back to r/collapse or something haha. Optimistic as hell about the state of AI, and excited to see where it goes from here.

YankeeAHoleFromTexas t1_j5veqvz wrote

Excellent, when is the great assimilation?

Sad-Plan-7458 t1_j5vogly wrote

People can see it coming now; we’re crossing the tipping point from “vaporware” to adopted reality. It’s great, shows curiosity is still alive and well.

[deleted] t1_j5w46lt wrote

Borrowedshorts t1_j5xda2b wrote

That r/machinelearning has gone downhill could be part of the reason. It's like they're stuck on problems from 5 years ago. I want to see stuff that's cutting edge, and this is the place for it.

CellWithoutCulture t1_j5xfc06 wrote

What, the activation function paper?

Neural architecture search is certainly helping, you see it with optimized models coming out. And I presume chip design is.

Crazy days!

LoquaciousAntipodean t1_j5xgucq wrote

And you gotta be the dumbest commenter. Do you have any actual point you want to make, or do you just drive-by snipe at people to try and make yourself look clever?

LoquaciousAntipodean t1_j5xhrnf wrote

Haha, you're so overconfident and smug, it's adorable. You need to watch out for your hubris, it doesn't actually make you smarter than everyone else.

Your magical 'math' does not just sit on top of emotion, all superior and shiny. You'll figure this out someday, or die trying.

But it looks like my attempts to persuade you that Cartesian tautologies are not the same thing as wisdom are never going to cut through; you're just going to keep accusing anyone you disagree with of being 'too emotional'.

That's called 'gaslighting', mate, and it's not a legitimate debate tactic. It doesn't look good on you, you really need to work on not doing that, or it will get you into real trouble in real life.

There's no point arguing with a gaslighter who just dismisses your every argument as 'emotional', so I bid you goodbye for now. I wish you luck in figuring out how to do cynicism and wisdom properly.

LoquaciousAntipodean t1_j5xite4 wrote

The phrase

>That's AGI. It is empirically as smart as an average human.

Contains nothing that makes any sense to me. This is where your whole argument falls down. There's nothing 'empirical' about that claim at all, and what human brains and AI synthetic personalities do to generate apparent intelligence is so vastly, incomprehensibly different that it's ridiculous to compare the two like that.

Language is the only common factor between humans and AI. The actual 'cognitive processes' are vastly different, and we can't just expect our solipsistic human 'individual animal' based game-theory mumbo-jumbo to map onto an AI mind so easily. AI is a type of mind that is all social context, and zero true individuality.

We are being stupid to reason as if it would do anything like what 'a human would do'; it doesn't think like that at all. AI will be nothing like a 'superintelligent human'. I fully expect the first truly 'self aware' AI to be an airheaded, schizophrenic, autistic-simulating mess of a personality. It's what I think I'm seeing early signs of with these Large Language Models: extreme 'cleverness', but no idea what to do with any of it.

SoylentRox t1_j5xpo6y wrote

>Your magical 'math' does not just sit on top of emotion, all superior and shiny.

From a theoretic perspective, it does. For example, you probably do know that if you're gambling in a card game, it doesn't matter how you feel. It's only the information that you have available to you and an algorithm someone validated in a simulation that should determine your actions.

Even for a game like poker, it turns out AI is better than humans, because apparently world-class poker players bluff perfectly enough that other humans can't tell.

As an individual human, with an evolved meatware brain, am I above emotion? Of course not. But from a factual perspective, arguing with math is more likely to be correct (or less wrong).
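The card-game point can be made concrete with a call/fold decision computed purely from the pot and a simulated hit probability, with no input for how the player feels. A minimal Python sketch, assuming a simplified spot with hypothetical numbers: 9 outs among 46 unseen cards, a pot of 100 chips, 20 chips to call, and that hitting wins the pot while missing loses the call:

```python
import random


def call_ev(win_prob: float, pot: float, call_cost: float) -> float:
    """Expected chips from calling: win the pot with probability win_prob,
    otherwise lose the amount paid to call."""
    return win_prob * pot - (1.0 - win_prob) * call_cost


def simulate_draw(outs: int, unseen: int, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of hitting one of `outs` cards when a single card
    is drawn uniformly at random from `unseen` cards."""
    rng = random.Random(seed)
    hits = sum(rng.randrange(unseen) < outs for _ in range(trials))
    return hits / trials


exact = 9 / 46                               # flush draw, one card to come
estimate = simulate_draw(outs=9, unseen=46)  # should land close to `exact`
ev = call_ev(estimate, pot=100, call_cost=20)
print("call" if ev > 0 else "fold")          # prints: call (exact EV is 160/46, about 3.48)
```

Here the simulation only confirms the closed-form probability 9/46; real poker bots also model opponents' ranges and bluffing, which this sketch ignores entirely.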

SoylentRox t1_j5xq6e2 wrote

>Contains nothing that makes any sense to me. This is where your whole argument falls down. There's nothing 'empirical' about that claim at all,

Here's what the claim is.

Right now, Gato demonstrated expert performance or better on a set of tasks. https://www.deepmind.com/blog/a-generalist-agent .

So Gato is an AI. You might call it a 'narrow general AI' because it's only better than humans at about 200 tasks, and the average living human likely has a broader skillset.

Thus an AGI - an artificial general intelligence - is one where it's as good as the average human on a set of tasks consistent with the breadth of skills an average living person has.

Basically, make the benchmark larger. 300,000 tasks or 3 million or 30 million. Whatever it has to be. The first machine to do as well as the average human on the benchmark is the world's first AGI.

A score on a cognitive test that you have humans also tested on is an empirical measurement of intelligence.
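The benchmark criterion described above can be sketched as a comparison of mean scores. A minimal Python sketch, assuming every task score is normalized to [0, 1], that the machine is evaluated on every task the humans took, and that "as good as the average human" means the machine's mean score is at least the average human's mean score; the task names and numbers are made up for illustration, not taken from Gato or any real benchmark:

```python
from statistics import mean


def average_human_scores(human_scores: dict[str, list[float]]) -> dict[str, float]:
    """Average the scores of all tested humans on each task."""
    return {task: mean(scores) for task, scores in human_scores.items()}


def meets_average_human_bar(
    machine_scores: dict[str, float], human_scores: dict[str, list[float]]
) -> bool:
    """True if the machine's mean score over the whole benchmark is at least
    the average human's mean score (scores assumed normalized to [0, 1])."""
    human_avg = average_human_scores(human_scores)
    missing = set(human_avg) - set(machine_scores)
    if missing:
        raise ValueError(f"machine was not evaluated on: {sorted(missing)}")
    machine_mean = mean(machine_scores[task] for task in human_avg)
    human_mean = mean(human_avg.values())
    return machine_mean >= human_mean


# Hypothetical benchmark: per-task scores for two tested humans.
humans = {
    "stack_blocks": [0.8, 0.6],
    "translate_text": [0.7, 0.9],
    "navigate_maze": [0.5, 0.7],
}
machine = {"stack_blocks": 0.9, "translate_text": 0.6, "navigate_maze": 0.65}
print(meets_average_human_bar(machine, humans))  # prints: True (about 0.717 vs 0.7)
```

Averaging over tasks is only one choice. Requiring the machine to match the human average on some fraction of tasks, or weighting held-out tasks more heavily, would be equally reasonable readings of the same idea.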

Arguably, you might also expect generality, simplicity of architecture, and online learning. You would put a lot of points in the benchmark on withheld tasks that use skills other tasks require but in a way the machine won't have seen.

Because we cannot benchmark tasks that can't be automatically graded, this makes it difficult for the AGI to learn things like social interactions. So you are correct, it might be 'autistic'.

It will probably not even have a personality. It's basically a robot where if you tell it to do something, and that something is similar enough to things it has practiced doing, it will be able to do it successfully.

It has no values or morals or emotions - lots of things. Just breadth of skills.

Negative-Weird t1_j5y6ti4 wrote

Largest in comparison to what?

KamikazeArchon t1_j600sc5 wrote

Don't overestimate causal relationships with any actual events. The sub started showing up on the default front page. That leads to a bunch of new users for any sub regardless of anything else.

_dekappatated OP t1_j5qiit7 wrote

All previous growth looked pretty linear, this is a sharp rise compared to the steady growth previously. I wouldn't be surprised if we continue to see exponential growth over the next few years as more consumer AI products are released to the public.