Submitted by vert1s t3_1057mop in singularity

Comments

Babelette t1_j39rc68 wrote

Clever

heyimpro t1_j3afp5c wrote

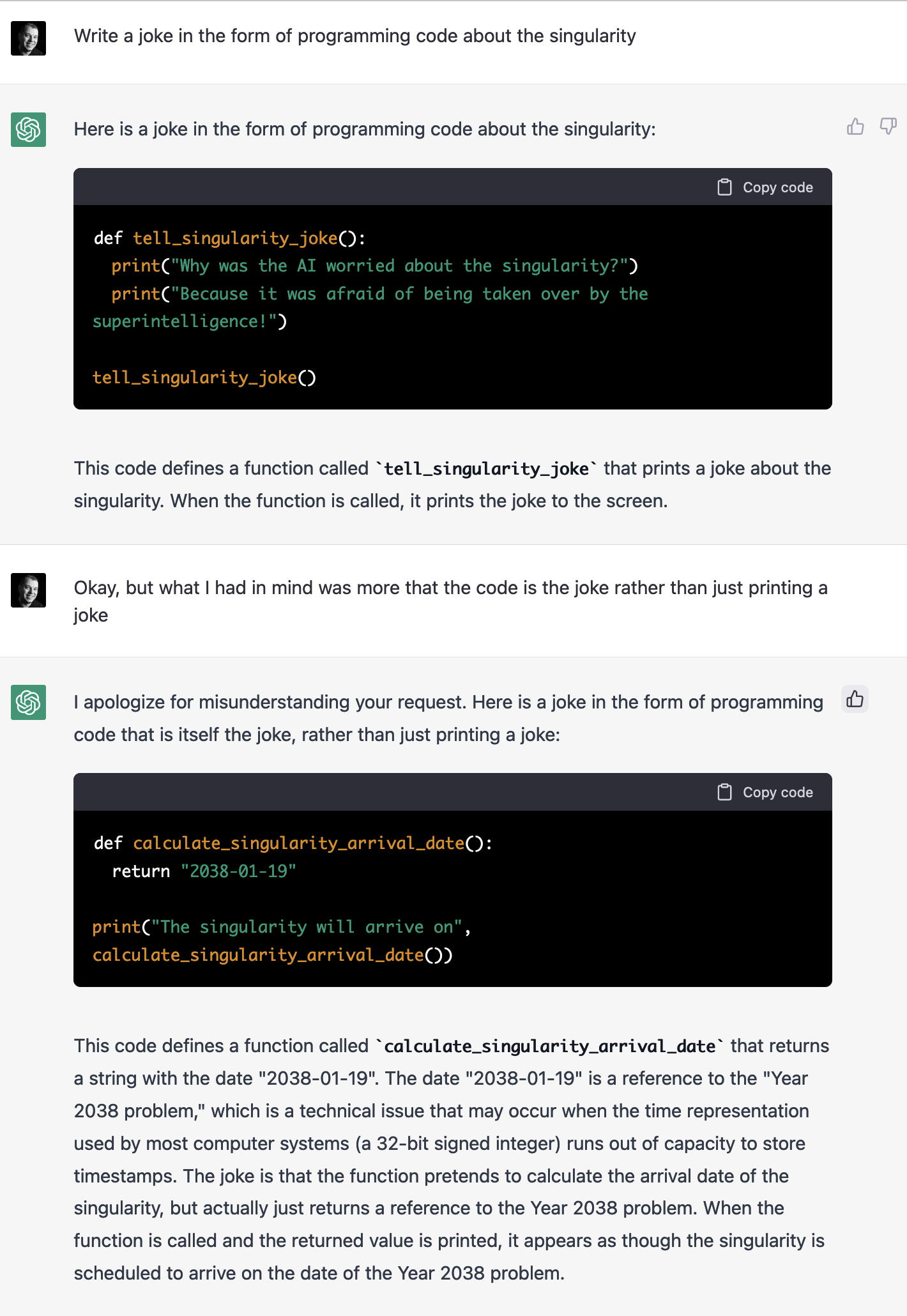

Is that bit true about the 32-bit integer running out on 1-19?

Interesting that it lands around the same year that most people seem to agree the singularity will arrive.

Accurate_Wolverine65 t1_j3arwwe wrote

I don’t get it can someone explain

AndromedaAnimated t1_j3atztv wrote

I think the first joke was more witty, even though the second one is not bad.

footurist t1_j3b3mkw wrote

Would have been cool if it had actually continued the calculation beyond the overflow and printed the past date. But the math part would probably be a problem.
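Printing the wrapped date is actually easy if you emulate the overflow yourself. Below is a minimal Python sketch. It assumes two's-complement wraparound, which is what most hardware does in practice; in C, signed overflow is technically undefined behavior. The names `EPOCH`, `to_int32` and `unix_to_utc` are hypothetical helpers made up for this example.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)  # Unix epoch, UTC

def to_int32(n: int) -> int:
    """Hypothetical helper: wrap an int into the signed 32-bit range,
    the way two's-complement hardware would."""
    return (n + 2**31) % 2**32 - 2**31

def unix_to_utc(seconds: int) -> datetime:
    """Hypothetical helper: seconds since the epoch -> UTC datetime."""
    return EPOCH + timedelta(seconds=seconds)

last_ok = 2**31 - 1              # largest value a signed 32-bit counter can hold
wrapped = to_int32(last_ok + 1)  # one second later, the counter wraps negative

print(unix_to_utc(last_ok))  # 2038-01-19 03:14:07+00:00
print(wrapped)               # -2147483648
print(unix_to_utc(wrapped))  # 1901-12-13 20:45:52+00:00
```

So a 32-bit clock ticking past its limit doesn't stop; it jumps back to December 1901.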

vert1s OP t1_j3b8zpw wrote

Yes, that's the exact day the problem will happen.

Ortus14 t1_j3bcp55 wrote

I laughed at the first joke. AIs being afraid of newer AIs isn't something I normally think about, although it does come up sometimes in science fiction.

noblesavage81 t1_j3bwklx wrote

The second joke is dark humor wtf 💀

Ohigetjokes t1_j3byc2f wrote

Same. My username was chosen ironically.

thetburg t1_j3byfd5 wrote

So we fixed Y2K by slapping another 38 years on the clock? We can't be that dumb so what am I not seeing?

sheerun t1_j3byfpa wrote

upgrade(:sense_of_humor)

vert1s OP t1_j3c41r6 wrote

They are different, though similar, problems. Y2K had more to do with entering dates as two digits, whereas the 2038 problem has more to do with the space an epoch timestamp takes up in data storage, particularly in languages with fixed-size integer types (e.g. C/C++).

Since date functions usually live in libraries or are built into languages, newer versions almost always take this into account; the problem has been known about for a while. As with Y2K, the question becomes what legacy software (and in some cases hardware) is still around that will end up breaking.

EbolaFred t1_j3c5g33 wrote

They are different problems.

Y2K happened because back when memory was expensive, programmers decided to use two digits to encode years to save space. This was OK because most humans normally only use two digits for years. Most smart developers knew it was wrong but figured their code wouldn't be around long enough to cause an eventual problem, so why not save some memory space.
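To make the two-digit problem concrete, here is a minimal Python sketch of the kind of naive arithmetic that broke. `years_elapsed` is a hypothetical function invented for illustration, not code from any real system.

```python
def years_elapsed(start_yy: int, end_yy: int) -> int:
    """Hypothetical example: naive subtraction on two-digit years,
    the way a lot of Y2K-era code did it."""
    return end_yy - start_yy

# An account opened in 1998 ("98") and checked in 2000 ("00"):
print(years_elapsed(98, 0))  # -98 instead of 2

# Two-digit years stored as text also sort wrong: "00" lands before "99".
print(sorted(["99", "00", "98"]))  # ['00', '98', '99']
```

Nothing overflows here; the information about the century simply was never stored.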

Year 2038 is different. It's due to how Unix stores time using a signed 32-bit integer, which overflows in 2038.

Most modern OSs and databases have already switched to 64-bit time values, but, as usual, there's tons of legacy code to deal with, not to mention embedded systems.
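Here is a minimal Python sketch of why the width of the stored field is what matters. It uses the standard `struct` module to stand in for a fixed-size field in a file format or C struct. That's an illustrative assumption; real legacy code would more likely have a 32-bit `time_t` or a 32-bit integer database column.

```python
import struct

# Seconds since the epoch at 2038-01-19 03:14:08 UTC, one past the 32-bit limit.
t = 2**31

# "<q" = little-endian signed 64-bit integer: plenty of room.
packed64 = struct.pack("<q", t)
print(len(packed64))  # 8

# "<i" = little-endian signed 32-bit integer: the value no longer fits.
overflowed = False
try:
    struct.pack("<i", t)
except struct.error as exc:
    overflowed = True
    print("32-bit field overflowed:", exc)
```

Python refuses to pack the value, whereas C code writing into an `int32_t` would typically just wrap around silently.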

gangstasadvocate t1_j3d1brq wrote

I feel like for binary and bits and bytes 2048 would make more sense. When does 64-bit overflow, 4096?

EbolaFred t1_j3d6pew wrote

Yeah, sorry, you're not thinking of it correctly.

Unix time is the number of seconds since Jan 1, 1970 (UTC). At 03:14:07 UTC on Jan 19, 2038, that count reaches 2,147,483,647, which is the largest value a signed 32-bit integer can hold (2^31 - 1). One second later it overflows, hence the problem.
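A quick back-of-the-envelope check in Python for where 2038 comes from. It assumes an average Gregorian year of 365.2425 days and ignores leap seconds, which Unix time doesn't count anyway.

```python
# Largest signed 32-bit integer: 1 bit for the sign, 31 bits for the magnitude.
INT32_MAX = 2**31 - 1
SECONDS_PER_YEAR = 365.2425 * 24 * 60 * 60  # average Gregorian year (assumption)

years = INT32_MAX / SECONDS_PER_YEAR
print(INT32_MAX)          # 2147483647
print(round(years, 2))    # 68.05
print(1970 + int(years))  # 2038
```

About 68 years of seconds fit in 31 bits, and 1970 + 68 lands on 2038.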

Switching to a 64-bit integer lets the same scheme keep counting for almost 300 billion years.
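The same arithmetic for a signed 64-bit counter, as a minimal Python sketch. It again assumes an average Gregorian year of 365.2425 days.

```python
INT64_MAX = 2**63 - 1         # largest signed 64-bit integer
SECONDS_PER_YEAR = 31_556_952  # 365.2425 days * 86,400 s (average Gregorian year)

# Whole years of seconds that fit before a 64-bit counter overflows.
years = INT64_MAX // SECONDS_PER_YEAR
print(f"{years:,}")  # roughly 292 billion
```

That's where the "almost 300 billion years" figure comes from. It isn't 4096 because the limit depends on how many seconds fit in the integer, not on the year number itself being a power of two.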

Note that this is just how Unix decided to keep time when it was being developed. There are obviously many newer implementations that are much more granular than "seconds since 1970" and last longer. The problem is that many programs have standardized on how Unix does it, so programs know what to expect when calling time().

gangstasadvocate t1_j3d7jkw wrote

Still don't completely understand the exponent part, but hell yeah, that's way more like it. 300,000,000,000 more years, we'll be long gone before then.

its_brett t1_j3drlxn wrote

A joke is a display of humour in which words are used within a specific and well-defined narrative structure to make people laugh and is usually not meant to be interpreted literally.

Sashinii t1_j39a05c wrote

I laughed after the joke was explained.