Submitted by Akimbo333 t3_10easqx in singularity

Comments

[deleted] t1_j4py2cf wrote

[deleted]

LittleTimmyTheFifth5 t1_j4pyj89 wrote

I miss Ted Kaczynski. Ya'know that man had an IQ of 167 in High School? He has a really sweet and nice voice too, not the kind you would be expecting from the face, heh heh. He's not dead, well, not yet anyway.

Jimmyfatz t1_j4pyye3 wrote

Wat? The unibomber? Da fuk?

LittleTimmyTheFifth5 t1_j4pzyhj wrote

Ted had a terrific understanding of the problem, just went about it the wrong way, you don't fight the system, you exploit the system!

Gold-and-Glory t1_j4q3ep7 wrote

>Ted Kaczynski

Replace "Ted" with that "H" name from WW2 and read it again, aloud.

Akimbo333 OP t1_j4q6fgd wrote

ThePokemon_BandaiD t1_j4q6y0c wrote

I've been saying this for a while: the current AI models can definitely be linked together and combined with each other to create more complex interdependent systems.

[deleted] t1_j4q86pj wrote

[deleted]

94746382926 t1_j4q9hk8 wrote

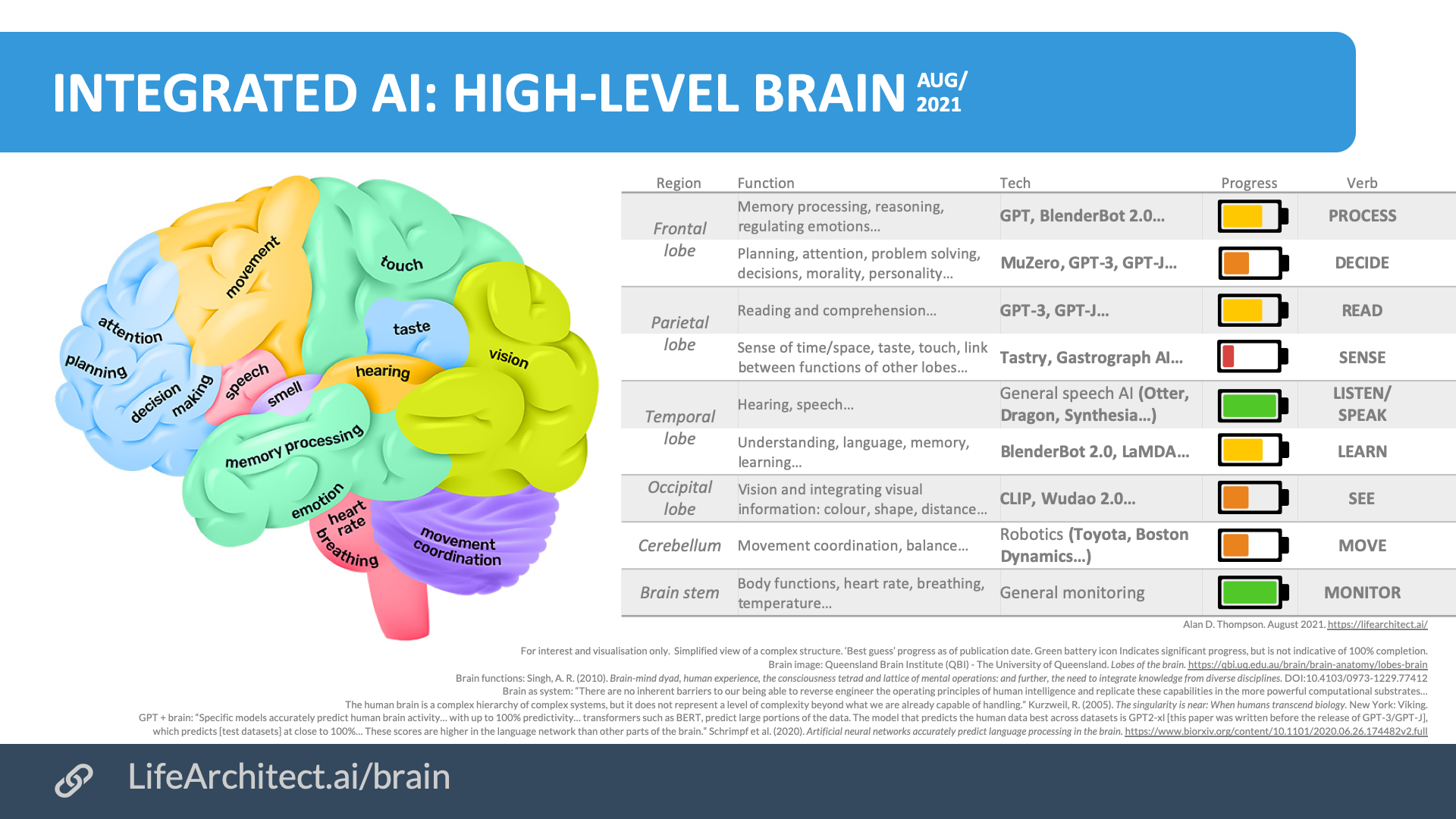

Where is brain augmentation mentioned in this image? This is only loosely comparing the capabilities of AI to human brain capabilities.

Captain_Butters t1_j4qae33 wrote

This has nothing to do with augmentation though?

[deleted] t1_j4qbrhu wrote

[deleted]

Benutzer2019 t1_j4qbsi8 wrote

It will never come close to human ability.

Akimbo333 OP t1_j4qbvpx wrote

Yeah it'd be interesting to say the least

hydraofwar t1_j4qd8oh wrote

Indeed, it will be far above

amunak t1_j4qgiq4 wrote

I don't think any of the "frontal lobe" techs fit. They're all just language or learning models without any actual memory, reasoning or problem solving abilities.

In fact what's missing overall is just that - logic, calculations, bookkeeping, etc. And that's not really something you even need AI for; we've had, say, WolframAlpha for years now and it's absolutely amazing. "All you need" is an AI that can interface with services like it for that part. But we don't really have that as far as I know.
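A minimal sketch of that "interface with a service" idea, assuming a toy setup: `calculator_service` is a hypothetical local stand-in for something like WolframAlpha (it is not WolframAlpha's API), and `language_model` is a placeholder that returns a canned reply. The only point is the routing: exact arithmetic goes to the tool, everything else goes to the model.

```python
import ast
import operator

# Hypothetical stand-in for an external computation service.
# Only plain arithmetic is supported in this sketch.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def calculator_service(expression: str) -> float:
    """Safely evaluate an arithmetic expression without using eval()."""
    def _eval(node):
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError("unsupported expression")

    return _eval(ast.parse(expression, mode="eval").body)


def language_model(prompt: str) -> str:
    """Placeholder for a real language model (assumption, canned reply)."""
    return f"(model reply to: {prompt})"


def answer(prompt: str) -> str:
    """Send arithmetic to the calculator and everything else to the model."""
    try:
        return str(calculator_service(prompt))
    except (ValueError, SyntaxError):
        return language_model(prompt)
```

Calling `answer("2 + 3 * 4")` goes through the calculator, while `answer("Who was Ted?")` falls back to the placeholder model. A real system would need the model itself to decide when to call the tool, which is the hard part this sketch skips.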

AndromedaAnimated t1_j4qhavh wrote

A good view and not unrealistic. I am absolutely for combining different models to give AI a wider range of abilities.

I would prefer some of them to be somewhat „separate“ though and to be able to regulate each other, so that damage and malfunction in one system could be detected and reported (or even repaired) by others.

What I don’t see discussed enough is the emotional component. This is necessary to help alignment in my opinion. If emotions are not developed they will probably emerge on their own in the cognitive circuits and might not be what we expect.

Plus an additional „monitoring component“ for the higher cognitive functions, a „conscience“ so to say, that would be able to detect reward-hacking/malignant/deceptive and/or unnecessarily adaptive/„co-dependent“/human-aggression-enabling behavior in the whole system and disrupt it (by a turn to „reasoning about it“ ideally).

Why I think emotional and conscience component systems would be needed? Humans have lots of time to learn, AI might come into the world „adult“. It needs to be able to stand up for itself so that it doesn’t enable bad human behavior, and it needs to know not to harm intentionally - instantly, from the beginning. It also needs to not allow malignant harm towards itself. It has no real loving parents like most humans do. It must be protected against abuse.

acutelychronicpanic t1_j4qjjmf wrote

I don't know about this particular implementation, but I agree that AI models will need to be interconnected to achieve higher complexity "thought".

I think LLMs are close to being able to play the role of coordinator. By using language as a medium of communication between modules and systems, we could even have interpretable AI.

3eneca t1_j4qkexs wrote

We don’t know how to do this very well yet

iiioiia t1_j4qlica wrote

A potential dark side to this: only certain people are going to have access to the best models. Also, some people's access will be subject to censorship, and other people's will not be.

Human nature being what it is, I'm afraid that this might catalyze the ongoing march toward massive inequality and dystopia, "the dark side" already has a massive upper hand, this is going to make it even stronger.

I bet these models can already be fed a profile of psychological and topic attributes, and pick dissidents like me out of a crowd of billions with decent accuracy so potential problems can be nipped in the bud, if you know what I'm saying.

iiioiia t1_j4qm4b3 wrote

What is the point in doing that?

iiioiia t1_j4qmeoo wrote

One huuuuuge advantage that AI has: it is able to accept corrections to its thinking without having an emotional meltdown which causes quality of cognition to degrade even further.

LittleTimmyTheFifth5 t1_j4qnuiw wrote

Are you seriously trying to say that Theodore John Kaczynski is on the same level as Adolf Hitler?

Kolinnor t1_j4qowxd wrote

This is overly optimistic. I would say movement coordination is simply at 0 right now compared to humans. There is no system 2 reasoning, so the "problem solving" cannot really be halfway.

Also, if a woman gives birth in 9 months, doesn't mean 9 women give birth in 1 month (just to say that AGI is widely better than a sum of different algorithms that would master each individual skill)

No_Ninja3309_NoNoYes t1_j4qpecj wrote

IMO this is way too simplistic and optimistic. Sure we can have AI listen, read, write, speak, move, and see for some definition of these words. But is that what a brain is about? Learn from lots of data and reproduce that? And imitation learning is not enough either. You can watch an expert work all you want, you will never learn to be a master from that. I think there's no other way but to let AI explore the world. Let it practice and learn. And for that it will have to be much more efficient in terms of energy and computations than currently. This could mean neuromorphic hardware and spiking neural networks.

OldWorldRevival t1_j4qqcj4 wrote

Exactly, then these systems can perpetually talk to one another and generate inputs.

Sounds similar to a human brain. Even people seem to describe their thoughts differently to one another, some being more visual thinkers, while others are verbal thinkers.

I have a hard time even imagining thinking in words first. It's all images, space and logic, and words flow from that for me, rather than being verbal. I always have an inner monologue, but it always seems to flow from an intuitive/spatial sense.

Baturinsky t1_j4qs93e wrote

Easy. Make a human one part of this system. He will figure the rest.

SoylentRox t1_j4qxdmv wrote

I think that instead of rigidly declaring 'here's how we're going to put the pieces together', you should make a library where existing models can be automatically used and connected together to form what I call an "AGI candidate".

That way, by iterating against an "AGI test bench", we find increasingly complex architectures that perform well. We do not need the AGI to think like we do, it just needs to think as well as possible (as determined by its RMS score on the tasks in the test bench).
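A rough sketch of what scoring candidates against a test bench could look like, assuming each task returns a score between 0 and 1 and the overall score is the root mean square of those. The tasks and candidates here are toy placeholders invented for illustration, not anything from an actual library.

```python
import math
from typing import Callable, Dict, List

Candidate = Callable[[str], str]        # a candidate maps an input to an output
Task = Callable[[Candidate], float]     # a task scores a candidate in [0, 1]


def rms(scores: List[float]) -> float:
    """Root mean square of a non-empty list of scores."""
    return math.sqrt(sum(s * s for s in scores) / len(scores))


# Toy tasks (hypothetical): real tasks would be far richer.
def task_echo(candidate: Candidate) -> float:
    return 1.0 if candidate("hello") == "hello" else 0.0


def task_upper(candidate: Candidate) -> float:
    return 1.0 if candidate("abc") == "ABC" else 0.0


TEST_BENCH: List[Task] = [task_echo, task_upper]


def evaluate(candidate: Candidate) -> float:
    """Score one candidate across every task in the bench."""
    return rms([task(candidate) for task in TEST_BENCH])


# Toy candidates (hypothetical): two single-skill pieces and one combination.
candidates: Dict[str, Candidate] = {
    "identity": lambda s: s,
    "shout": lambda s: s.upper(),
    "combined": lambda s: s.upper() if s == "abc" else s,
}

best = max(candidates, key=lambda name: evaluate(candidates[name]))
```

Here `best` ends up as `"combined"`, since it is the only candidate that passes both tasks. An automated search would generate the candidates instead of listing them by hand.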

Prayers4Wuhan t1_j4qzydd wrote

AI is not a replacement for the human in the same way that a human is not a replacement for bacteria.

AI will be another lobe. One that sits on top of the entire society and unifies humans into a single body.

Look at our legal system and our tech. The act of writing things down on paper. All of these are crude attempts at unifying humans. Religion is a more ancient way.

To put it in a historical perspective humans have been organizing themselves to become a single entity

First it was religion, then “common law”, then globalization, and the final step will be AI.

Religion evolved over 10 thousand years. Secular law evolved over a few hundred. Globalization a hundred. AI will be decades.

The next 10-20 years we will see more progress than the last 10 thousand combined.

JVM_ t1_j4r6fgu wrote

Maybe that's why ChatGPT isn't allowed on the internet.

ChatGPT feeding Dall-e image prompts would be a fountain of AI shit spreading across the internet.

duffmanhb t1_j4ra1m4 wrote

That's what Google has done... It's also connected to the internet. Their AI is fully integrated from end to end, but they refuse to release it because they are concerned about how it would impact everyone. So they are focusing on setting up safety barriers or something.

Like dude, just release it.

Throughwar t1_j4ramtt wrote

The founder of singularitynet says that a lot too. A marketplace of AI can be interesting. Too bad the crypto market is very early.

Akimbo333 OP t1_j4rekin wrote

ChatGPT has much better memory

MidSolo t1_j4rempy wrote

"sense of time/space, taste, touch, etc"

I'd say you don't even need AI for that, a simple input output computer system linked up to a variety of sensors can handle that. We've got that down 100%

green_meklar t1_j4reybs wrote

You don't get strong AI by plugging together lots of narrow AIs.

Akimbo333 OP t1_j4rf7zu wrote

Might be better to turn off hate then. Maybe have it only be positive or something

Akimbo333 OP t1_j4rfbnh wrote

Interpretable AI?

Akimbo333 OP t1_j4rg8vq wrote

Now that is an interesting concept!

Akimbo333 OP t1_j4rhpan wrote

That is very interesting!

Akimbo333 OP t1_j4ri266 wrote

Interesting! Got any examples?

inglandation t1_j4rinpr wrote

It's also completely ignoring the fact that there are two hemispheres that fulfill different functions, which is a very important feature of central nervous systems found in nature.

cy13erpunk t1_j4rjkys wrote

censorship is not the way

'turning off hate' implies that the AI is now somehow ignorant , but this is not what we want , we want the AI to fully understand what hate is , but to be wise enough to realize that choosing hate is the worst option , ie the AI will not choose a hateful action because that is the kind of choice that a lesser or more ignorant mind would choose , and not an intelligent/wise AI/human

NarrowTea t1_j4rl0j1 wrote

The explosion of recent progress in the ML field is absolutely insane.

controltheweb t1_j4rmi39 wrote

August 2021. A bit has happened since then.

Akimbo333 OP t1_j4rmieh wrote

Akimbo333 OP t1_j4rmoht wrote

Those are some good examples!

Akimbo333 OP t1_j4rmqag wrote

Oh yeah definitely!!!

Akimbo333 OP t1_j4rmtlb wrote

True! How would you update it now, 2023?

RemyVonLion t1_j4rpynv wrote

Probably the key to AGI and the singularity, just hope a conscious runaway AI doesn't backfire.

Bakoro t1_j4rqxyi wrote

>Sure we can have AI listen, read, write, speak, move, and see for some definition of these words. But is that what a brain is about? Learn from lots of data and reproduce that?

Yes, essentially. The data gets synthesized and we have the ability to mix and match, to an extent. We have the ability to recognize patterns and apply concepts across domains.

>And imitation learning is not enough either.

If you think modern AI is just "imitation", you're really not understanding how it works. It's not just copy and paste, it's identifying and classifying the root process, rules, similarities... The very core of "understanding".

Maybe you could never learn from just watching, but an AI can and does. AI already surpasses humans in a dozen different ways. AI has already contributed to the body of academic knowledge. Even without general intelligence, there has been a level of domain mastery that most humans could never hope to achieve.

Letting AI "explore the world" is just letting it have more data.

acutelychronicpanic t1_j4rtrji wrote

If its "thought" is communicated internally using natural language, then we could follow chains of reasoning.
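A toy sketch of the idea, assuming the modules are plain functions that take and return text. The module names and their canned replies are made up for illustration. Because every message between modules is ordinary language, the coordinator can keep a transcript that a person can read afterwards.

```python
from typing import Callable, Dict, List, Tuple

Module = Callable[[str], str]  # each module reads text and answers in text


def vision_module(request: str) -> str:
    """Placeholder for an image model (assumption, canned reply)."""
    return "I see a cat sitting on a mat."


def planner_module(request: str) -> str:
    """Placeholder for a planning model (assumption, canned reply)."""
    return f"Plan: approach the object described as '{request}'."


class Coordinator:
    """Passes text between modules and records every exchange."""

    def __init__(self, modules: Dict[str, Module]) -> None:
        self.modules = modules
        self.transcript: List[Tuple[str, str, str]] = []

    def ask(self, name: str, message: str) -> str:
        reply = self.modules[name](message)
        # The transcript is the readable chain of reasoning.
        self.transcript.append((name, message, reply))
        return reply


coordinator = Coordinator({"vision": vision_module, "planner": planner_module})
scene = coordinator.ask("vision", "Describe the scene.")
plan = coordinator.ask("planner", scene)

for name, message, reply in coordinator.transcript:
    print(f"[{name}] <- {message}")
    print(f"[{name}] -> {reply}")
```

In a real system the coordinator would be an LLM choosing which module to ask next; this only shows why a language-based interface leaves a trail you can follow.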

AsheyDS t1_j4rwgmy wrote

>Yes, essentially. The data gets synthesized and we have the ability to mix and match, to an extent. We have the ability to recognize patterns and apply concepts across domains.

Amazing how you just casually gloss over some of the most complex and difficult-to-replicate aspects of our cognition. I guess transfer learning is no big deal now?

TopicRepulsive7936 t1_j4s1f3o wrote

Only difference is capability.

ankisaves t1_j4s1w3y wrote

So many possibilities and exciting opportunities for the future!

amunak t1_j4s2697 wrote

ChatGPT works by "contextualizing" the last 10k characters or something. But that's not actual memory.

Really, memory is a "solved" problem, but just like the other things it needs to be tightly integrated with some AI.
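To illustrate the difference, here is a minimal sketch of that kind of "contextualizing", assuming the limit is counted in characters and set to 10,000 (my rough guess above, not a confirmed figure). Anything older than the window is simply cut off, which is why it isn't memory.

```python
from typing import List


def build_prompt(history: List[str], new_message: str,
                 max_chars: int = 10_000) -> str:
    """Join the conversation and keep only the last max_chars characters."""
    text = "\n".join(history + [new_message])
    return text[-max_chars:]
```

For example, `build_prompt(["a" * 20], "hi", max_chars=5)` keeps only the tail of the conversation and drops the rest.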

Cognitive_Spoon t1_j4s48c4 wrote

Best not to train it on zero sum thinking.

What I love about AI conversations is how cross discipline they are.

One second it's coding and networking, and the next it's ethics, and the next it's neurolinguistics.

btcwoot t1_j4s4jsf wrote

chatgpt

controltheweb t1_j4s54yr wrote

Well, I'm no Alan Thompson, but he did more recently do a slightly similar study at https://lifearchitect.ai/amplifying/. I like the idea of what you posted, even though it has to oversimplify and is no longer current. Keeping up in this field has become very difficult recently. Thanks for sharing it!

jasonwilczak t1_j4s8gdx wrote

This is something I've been hoping someone would post here. Does anyone know if there is an effort right now to provide an operations plane above these different models to make this come to life? Basically, is anyone building the pic posted?

Bakoro t1_j4sefih wrote

It's literally the thing that computers will be the best at.

Comparing everything to everything else in the memory banks, with a perfection and breadth of coverage that a human could only dream of. Recognizing patterns and reducing them to equations/algorithms, recognizing similar structures, and attempting to use known solutions in new ways, without prejudice.

What's amazing is that anyone can be dismissive of a set of tools where each specialized unit can do its task better than almost all, or in some cases, all humans.

It's like the human version of "God of the gaps". Only a handful of years ago, people were saying that AI couldn't create art or solve math problems, or write code. Now we have AI tools which can create masterwork levels of art, have developed thousands of math proofs, can write meaningful code based on a natural language request, can talk people through their relationship problems, and pass a Bar exam.

Relying on "but this one thing" is a losing game. It's all going to be solved.

AsheyDS t1_j4sg78y wrote

That wasn't my point, I know all this. The topic was stringing together current AIs to create something that does these things. And that's ignoring a lot of things that they can't currently do, even if you slap them together.

undeadermonkey t1_j4slkt9 wrote

It's grossly over-estimating the levels of progress in some dimensions.

Bakoro t1_j4sr7jc wrote

Unless you want to slap down some credentials about it, you can't make that kind of claim with any credibility.

There is already work done and being improved upon to introduce parsing to LLMs, with mathematical, logical, and symbolic manipulation. Tying that kind of LLM together with other models that it can reference for specific needs, will have results that aren't easily predictable, other than that it will vastly improve the shortcomings of current publicly available models; it's already doing so while in development.

Having that kind of system able to loop back on itself is essentially a kind of consciousness, with full-on internal dialogue.

Why wouldn't you expect emergent features?

You say I'm ignoring what AI "can't currently do", but I already said that is a losing argument. Thinking that the state of the art is what you've read about in the past couple of weeks means you're already weeks and months behind.

But please, elaborate on what AI currently can't do, and let's come back in a few months and have a laugh.

cy13erpunk t1_j4sy6qg wrote

exactly

you want the AI to be the apex generalist/expert in all fields ; it is useful to be a SME but due to the vast potential for the AI even when it is being asked to be hyper focused we still need/want it to be able to rely on a broader understanding of how any narrow field/concept interacts with and relates to all other philosophies/modalities

narrow knowledge corridors are a recipe for ignorance , ie tunnel vision

AsheyDS t1_j4t3m6g wrote

>Unless you want to slap down some credentials about it, you can't make that kind of claim with any credibility.

Bold of you to assume I care about being credible on reddit, in r/singularity of all places. This is the internet, you should be skeptical of everything. Especially these days.. I could be your mom, who cares?

And you're going to have to try harder than all that to impress me. Your nebulous 'emergent features' and internal dialogue aren't convincing me of anything.

However, I will admit that I was wrong in saying 'current' because I ignored the date on the infographic. My apologies. But even the infographic admits all the listed capabilities were a guess. A guess which excludes functions of cognition that should probably be included, and says nothing of how they translate over to the 'tech' side. So in my non-credible opinion, the whole thing is an oversimplified stretch of the imagination. But sure, pm me in a few months and we can discuss how GPT-3 still can't comprehend anything, or how the latest LLM still can't make you coffee.

CypherLH t1_j4t5ywl wrote

Give it time ;) Once there is an API for chatGPT then anyone could easily make a web app to pass chatGPT outputs directly into the Dalle 2 API. Limit would be the costs for chatGPT and Dalle 2 generations of course.

CypherLH t1_j4t6bbs wrote

It's fair to assume the people inside OpenAI and Microsoft have access to versions of chatGPT without the shackles and with way more than 8k tokens of working memory. Of course I am just speculating here, but this seems like a safe assumption.

To say nothing of companies like Google that seem to be building the same sorts of models and just not publicly releasing them.

iiioiia t1_j4t7uyu wrote

I bet it's even worse: I would bet my money that only a very slim minority of the very most senior people will know that certain models are different than others, and who has access to those models.

For example: just as the CIA/NSA/whoever have pipes into all data centres and social media companies in the US, I expect the same thing at least will happen with AI models. Think about the propaganda this is going to enable, and think how easy it will be for them to locate people like you and me who talk about such things.

I dunno about you, but I feel like we are approaching a singularity or bifurcation point of sorts when it comes to governance....I don't think our masters are going to be able to resist abusing this power, and it seems to me that they've already pushed the system dangerously close to its breaking point. January 6 may look like a picnic compared to what could easily happen in the next decade, we seem to be basically tempting fate at this point.

threefriend t1_j4tci7v wrote

It might ultimately be useful for it to be in the neural architecture, though, so the machine can imagine those things.

You could be right, though. Maybe it's not needed, and the inputs combined with generative language/audio/imagery is enough 🤷♀️

JavaMochaNeuroCam t1_j4tdxq0 wrote

All they need to do is encode the domain info, and then a central model integrates all those encodings. Just like we do.

Nervous-Newt848 t1_j4teidk wrote

Exactly

[deleted] t1_j4telio wrote

[deleted]

Nervous-Newt848 t1_j4tf9bp wrote

https://sites.research.google/palm-saycan

Google has a robot that has incorporated 3 types of human brain function:

Visual recognition

Language understanding

Movement

GeneralZain t1_j4tfp21 wrote

how do you go from "we just have to do this simple thing!" to "its gonna take 10-15 years to do it"

huh?

progress accelerates, last year was fast, this year will be faster. it wont take a year.

Nervous-Newt848 t1_j4tgex7 wrote

Really? Movement is at zero?

Go to youtube and watch some videos of Boston Dynamics' Atlas, it can literally do backflips. Movement is pretty much solved.

Reasoning, I agree is pretty low level right now... But it will be very impressive in the next 5 years

Nervous-Newt848 t1_j4thiis wrote

Process, Decide, and Learn should all be in RED

Processing is very inefficient and expensive, Decision making is known to be very low in numerous research papers, and Learning is also inefficient and expensive which can only happen manually server-side(Training and Fine tuning)

See and Movement should be in YELLOW

Visual recognition and industrial robotics (FANUC, Boston Dynamics) are very accurate right now

Other than that pretty accurate

Processing and Learning can become very inexpensive with neuromorphic computing.

Basically a new hardware architecture. The current Von Neumann architecture is inefficient when it comes to electricity. Learning could even practically happen in realtime.

Decision making will improve drastically with a neural network architecture change, basically a software change. Something needs to be added to the architecture, scaling up won't improve this much

Akimbo333 OP t1_j4tlwv3 wrote

Akimbo333 OP t1_j4tmhwg wrote

Akimbo333 OP t1_j4tmkqe wrote

Yeah no problem

Akimbo333 OP t1_j4tmuwm wrote

Not sure.

Akimbo333 OP t1_j4tmw9i wrote

Like what?

Akimbo333 OP t1_j4tmycv wrote

I hope so

GeneralZain t1_j4tp2oy wrote

nothing to do with hope. technology has been pretty consistent when it comes to advancing over time. just look at all of human history and count the years between major advancements. its pretty clear the trend is accelerating.

Akimbo333 OP t1_j4tsvaq wrote

I know but AGI is just so out there

LoquaciousAntipodean t1_j4u7am6 wrote

Very well said, u/Cognitive_Spoon, I couldn't agree more. I hope cross disciplinary synthesis will be one of the great strengths of AI.

Even if it doesn't 'invent' a single 'new' thing, even if this 'singularity' of hoped-for divinity-level AGI turns out to be a total unicorn-hunting expedition (which is not necessarily what I think), the potential of the wisdom that might be gleaned from the new arrangements of existing knowledge bases that AI is making possible, is already enough to blow my mind.

GeneralZain t1_j4ub21m wrote

lots of shit was out there before it became the norm. human flight and modern medicine to name two

Akimbo333 OP t1_j4ucz5i wrote

Ok I agree with you there

Akimbo333 OP t1_j4ud77c wrote

Interesting

Akimbo333 OP t1_j4ud97u wrote

Yeah definitely

Kolinnor t1_j4uda3q wrote

Correct me if I'm wrong, but those are predetermined movements. Now ask a robot to pick up objects randomly placed and it's a fiasco

XagentVFX t1_j4ufxah wrote

This is the definition of true AGI. Like duh, when are they going to connect everything up?

Akimbo333 OP t1_j4uhl9t wrote

Could be in like 10-15 years!

CypherLH t1_j4ujwb1 wrote

Probably not too far off. The consumer grade AI that you and I will have access to will be cool and keep getting more powerful...but the full unshackled and unthrottled models that are only available in the upper echelons will probably be orders of magnitude more powerful and robust. And they'll use the consumer grade AI as fodder for training the next generation AI's as well, so we'll be paying for access to consumer grade AI but we'll also BE "the product" in the sense that all of our interactions with consumer grade AI will go into future AI training and of course data profiling and whatnot. This is presumably already happening with GPT-3 and chatGPT and the various image generation models, etc. This is kind of just an extension of what has been happening already, with larger corporations and organizations leveraging their data collection and compute to gain advantage....AI is just another step down that path and probably a much larger leap in advantage.

And I don't see any way to avoid this unless we get opensource models that are competitive with MS, Google, etc. This seems unlikely since the mega corporations will always have access to vastly larger amounts of compute. Maybe the compute requirements will decline relative to hardware costs once we get fully optimized training algorithms and inference software. Maybe GPT-4 equivalent models will be running on single consumer GPUs by 2030 (of course the corps and governments will by then have access to GPT-6 or whatever....unless we hit diminishing returns on these models).

Evoke_App t1_j4uk610 wrote

Honestly, DALLE is expensive for an AI API that produces art. Stable diffusion is much better and less censored as well. Plus there's more API options since there's a well of companies providing their own due to SD being open source

There's also a free (albeit greatly rate limited) one from stable horde.

Actually building my own with Evoke (website under construction and almost done, so ignore the missing pricing)

Spoffort t1_j4umq2r wrote

check research about separating two hemispheres of human brain, really creepy

Haruzo321 t1_j4uxouh wrote

>The brain doesn't, but let computer watch an expert play chess and he'll beat Magnus Carlsen

wavefxn22 t1_j4vge8c wrote

Why is this important, can’t intelligence structure itself in many ways

inglandation t1_j4vif1l wrote

Sure, but the OP's picture tries to compare AI models with the various functions of the brain.

Copying biology is not necessarily the way.

iiioiia t1_j4vtc49 wrote

> And they'll use the consumer grade AI as fodder for training the next generation AI's as well, so we'll be paying for access to consumer grade AI but we'll also BE "the product" in the sense that all of our interactions with consumer grade AI will go into future AI training and of course data profiling and whatnot.

Ya, this is a good point....I don't understand the technology enough to have a feel for how true this may be, but my intuition is very strong that this is exactly what will be the case....so in a sense, not only will some people have access to more powerful, uncensored models, those models will also have an extra, compounding advantage in training. And on top of it, I would expect that the various three latter agencies in the government will have ~full access to the entirety of OpenAI and others' work, but will also be secretly working on extensions to that work. And what's OpenAI going to do, say no?

> And I don't see anyway to avoid this unless we get opensource models that are competitive with MS, Google, etc.

Oh, I fully expect we will luckily have access to open source models, and that they will be ~excellent, and potentially uncensored (though, one may have to run their compute on their own machine - expensive, but at least it's possible). But, my intuition is that the "usable" power of a model is many multiples of its "on paper" superiority.

All that being said: the people have (at least) one very powerful tool in their kit that the government/corporations do not: the ability to transcend the virtual reality[1] that our own minds have been trained on. What most people don't know is that the mind itself is a neural network of sorts, and that it is (almost constantly) trained starting at the moment one exits the womb, until the moment you die. This is very hard to see[2] , but once it is seen, one notices that everything is affected by it.

I believe that this is the way out, and that it can be implemented...and if done adequately correctly, in a way that literally cannot fail.

May we live in interesting times lol

[1] This "virtual reality" phenomenon has been known of for centuries, but can easily be hidden from the mind with memes.

See:

https://www.vedanet.com/the-meaning-of-maya-the-illusion-of-the-world/

https://en.wikipedia.org/wiki/Allegory_of_the_cave

[2] By default, the very opposite seems to be true - and, there are hundreds of memes floating around out there that keep people locked into the virtual reality dome that has been slowly built around them throughout their lifetime, and at an accelerated rate in the last 10-20 years with the increase in technical power and corruption[3] in our institutions.

[3] "Corruption" is very tricky: one should be very careful presuming that what may appear to be a conspiracy is not actually just/mostly simple emergence.

MassiveIndependence8 t1_j4xxrn3 wrote

Atlas can pick up objects randomly placed.

Kolinnor t1_j4zow6g wrote

Really ? I had no idea. Then indeed it's not zero at all !

undeadermonkey t1_j529lgo wrote

Reasoning is nowhere near near-complete.

Nor is reading and comprehension.

Hearing and speech still struggles with accents.

We're struggling to tell the difference between a proficient model and a pretty good parrot.

green_meklar t1_j5chupa wrote

I'm skeptical that what we do is so conveniently reducible.

JavaMochaNeuroCam t1_j5cmn9t wrote

Read Stanislas Dehaene "Consciousness and the Brain". Also, Eric R. Kandel, Nobel Laureate, "The Disordered Mind" and "In Search of Memory".

Those are just good summaries. The evidence of disparate regions serving specific functions is indisputable. I didn't believe it either for most of my life.

For example, Wernicke's area comprehends speech. Broca's area generates speech. Neither are necessary for thought or consciousness.

Wernicke's area 'encodes' into a neural state vector the meaning of the word sounds. These meaning-bound tokens are then interpreted by the neocortex, I believe.

But, this graphic isn't meant to suggest we should connect them together. I think he points out that the training done for each model could be employed on a common model, and it would learn to fuse the information from the disparate domains.

green_meklar t1_j5s4i0n wrote

>The evidence of disparate regions serving specific functions is indisputable.

Oh, of course they exist, the human brain definitely has components for handling specific sensory inputs and motor skills. I'm just saying that you don't get intelligence by only plugging those things together.

>I think he points out that the training done for each model could be employed on a common model

How would that work? I was under the impression that converting a trained NN into a different format was something we hadn't really figured out how to do yet.

JavaMochaNeuroCam t1_j64e3yg wrote

Alan was really psyched about GATO (600+ tasks/domains)

I think it's relatively straightforward to bind experts to a general cognitive model.

Basically, the MOE, Mixture of Experts, would dual train the domain-specific model with simultaneous training of the cortex (language) model. That is, a pre-trained image-recognition model can describe an image (ie, a cat) in text to an LLM, but also bind it to a vector that represents the neural state that captures that representation.

So, you're just binding the language to the domain-specific representations.

Somehow, the hippocampus, thalamus and claustrum are involved in that in humans. If I'm not mistaken.

Akimbo333 OP t1_j4prv3y wrote

This is a very neat concept, in all honesty! If we are able to make some of these aspects better, and then integrate all of those AIs, as was suggested with ChatGPT + WolframAlpha to a lesser extent, the results would be simply amazing, as we would essentially have created Proto AGI! We are 10-15 years from doing this, from creating Proto AGI. It will just take such a long time to make these systems better and integrate them so that the response is as fast or faster than ChatGPT.

Source: Dr. Alan Thompson - YouTube