Submitted by radi-cho t3_11izjc1 in MachineLearning

Comments

deekaire t1_jb1jd2i wrote

Great comment 👍

askljof t1_jb4bkf0 wrote

Amazing reply 🤝

Delster111 t1_jb4z3qm wrote

fantastic discourse

Adamanos t1_jb542kw wrote

immaculate dialogue

FeministNeuroNerd t1_jb54wql wrote

Superb interchange

knight1511 t1_jb5975w wrote

Exquisite deliberation

Elon_Muskoff t1_jb59xxp wrote

Stack Overload

speyside42 t1_jb425d4 wrote

A good mixture is key. Independent applied research will show whether the claims of slight improvements hold in general. A counter example where "this kind of research" has failed us are novel optimizers.

PassionatePossum t1_jb4977c wrote

Agreed. Sometimes theoretical analysis doesn't transfer to the real world. And sometimes it is also valuable to see a complete system. Because the whole training process is important.

However, since my days in academia are over, I am much less interested in getting the next 0.5% of performance out of some benchmark dataset. In industry you are way more interested in a well-working solution that you can produce quickly instead of the best-performing solution. So, I am way more interested in a tool set of ideas that generally work well and ideally a knowledge of what the limitations are.

And yes, while papers about applications can provide practical validation of these ideas, very few of these papers conduct proper ablation studies. And in most cases it is also too much to ask. Pretty much any application is a complex system with an elaborate pre-processing and training procedure. You cannot practically evaluate the influence of every single step and parameter. You just twiddle around with the parameters you deem to be most important and that is your ablation study.

radi-cho OP t1_jb0oopy wrote

Chadssuck222 t1_jb15xxk wrote

Noob question: why title this research as ‘reducing under-fitting’ and not as ‘improving fitting of the data’?

Toast119 t1_jb16zt9 wrote

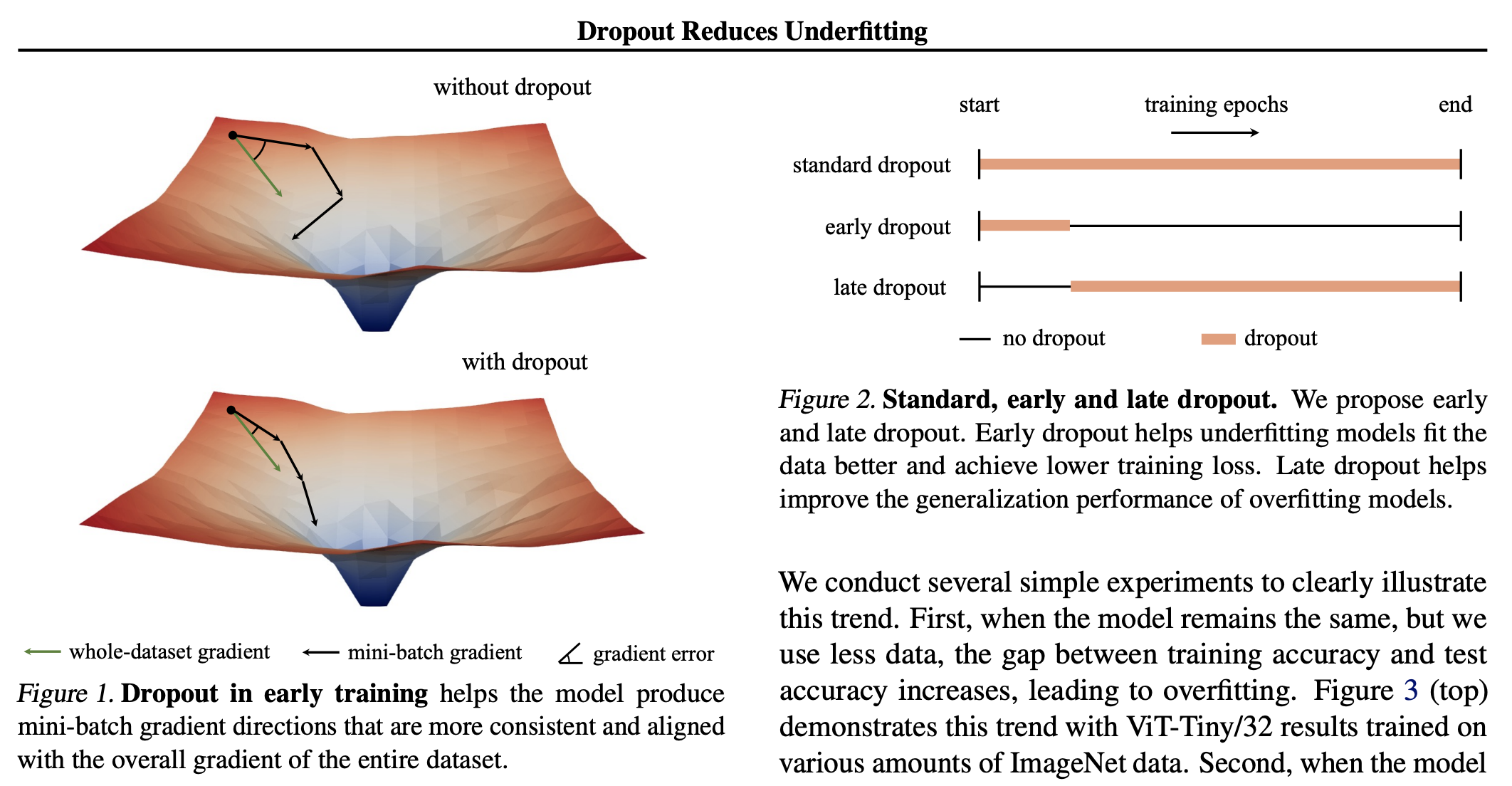

I think it's because Dropout is usually seen as a method for reducing overfitting and this paper is claiming and supporting that it is also useful for reducing underfittting as well.

farmingvillein t1_jb18evq wrote

Yes. In the first two lines of the abstract:

> Introduced by Hinton et al. in 2012, dropout has stood the test of time as a regularizer for preventing overfitting in neural networks. In this study, we demonstrate that dropout can also mitigate underfitting when used at the start of training.

[deleted] t1_jb19gqq wrote

[deleted]

BrotherAmazing t1_jb37vx3 wrote

It’s sort of a “clickbait” title I didn’t like myself even if it’s a potentially interesting paper.

Usually we assume dropout helps prevent overfitting, not help with underfitting, but the thing I don’t like about the title is it makes it sound like dropout helps with underfitting in general. It does not and they don’t even claim it does—even by the time you finish reading their Abstract you can tell that they’re only saying dropout has been observed to help with underfitting in certain circumstances when used in certain ways only.

I can come up with low dimensional counter-examples where dropout won’t help you when you’re underfitting, and will necessarily be the cause of the underfitting for example.

[deleted] t1_jb1vdtd wrote

[removed]

ElleLeonne t1_jb16hic wrote

Maybe it hurts generalization? ie, causes overfitting?

There could even be a second paper in the works to address this question

[deleted] t1_jb2acxm wrote

[deleted]

tysam_and_co t1_jb0x0e7 wrote

Interesting. This seems related to https://arxiv.org/abs/1711.08856.

tysam_and_co t1_jb2kgo2 wrote

Hold on a minute. On reading through the paper again, this section stood out to me:

>Bias-variance tradeoff. This analysis at early training can be viewed through the lens of the bias-variance tradeoff. For no-dropout models, an SGD mini-batch provides an unbiased estimate of the whole-dataset gradient because the expectation of the mini-batch gradient is equal to the whole-dataset gradient. However, with dropout, the estimate becomes more or less biased, as the mini-batch gradients are generated by different sub-networks, whose expected gradi- ent may not match the full network’s gradient. Nevertheless, the gradient variance is significantly reduced, leading to a reduction in gradient error. Intuitively, this reduction in variance and error helps prevent the model from overfitting to specific batches, especially during the early stages of training when the model is undergoing significant changes

Isn't this backwards? It's because of dropout that we should receive _less_ information from each iteration update, which means that we should be _increasing_ the variance of the model with respect to the data, not decreasing it. We've seen in the past that dropout greatly increases the norm of the gradients over training -- more variance. And we can't possibly add more bias to our training data with random I.I.D. noise, right? Shouldn't this effectively slow down the optimization of the network during the critical period, allowing it to integrate over _more_ data, so now it is a better estimator of the underlying dataset?

I'm very confused right now.

amhotw t1_jb38ai5 wrote

Based on what you copied: they are saying that dropout introduces bias. Hence, it reduces the variance.

Here is why it might be bothering you: bias-variance trade-off makes sense if you are on the efficient frontier, ie cramer-rao bound should hold with equality for trade-off to make sense. You can always have a model with a higher bias AND a higher variance; introducing bias doesn't necessarily reduce the variance.

tysam_and_co t1_jb3i6eq wrote

Right, right, right, though I don't see how dropout introduces bias into the network. Sure, we're subsampling the network in general, but overall the information integrated with respect to a minibatch should be less on the whole due to gradient noise, right? So the bias should be less and as a result we have more uncertainty, then more steps equals more integration time of course and on we go from there towards that elusive less-biased estimator.

I guess the sticking point is _how_ they're saying that dropout induces bias. I feel like fitting quickly in a non-regularized setting has more bias by default, because I believe the 0-centered noise should end up diluting the loss signal. I think. Right? I find this all very strange.

Hiitstyty t1_jb4wjnj wrote

It helps to think of the bias-variance trade off in terms of the hypothesis space. Dropout trains subnetworks at every iteration. The hypothesis space of the full network will always contain (and be larger) than the hypothesis space of any subnetwork, because the full network has greater expressive capacity. Thus, the full network can not be any less biased than any subnetwork. However, any subnetwork will have reduced variance because of its smaller relative hypothesis space. Thus, dropout helps because its reduction in variance offsets its increase in bias. However, as the dropout proportion is set increasingly higher, eventually the bias will be too great to overcome.

RSchaeffer t1_jb26p98 wrote

Lucas Beyer made a relevant comment: https://twitter.com/giffmana/status/1631601390962262017

"""

​

The main reason highlighted is minibatch gradient variance (see screenshot).

This immediately asks for experiments that can validate or nullify the hypothesis, none of which I found in the paper

​

"""

jobeta t1_jb1otw2 wrote

Neat! What's early s.d. in the tables in the github repo?

alterframe t1_jb1txte wrote

Early stochastic depth. That's where you take a ResNet and randomly drop residual connections so that the effective depth of the network randomly changes.

WandererXZZ t1_jb3wyfc wrote

It's actually, for every layer in the ResNet, dropping everything else except residual connections with a probability p. See this paper Deep Network with Stochastic Depth

alterframe t1_jb4nwrt wrote

Yes, I worded it badly.

jobeta t1_jb3hh74 wrote

This is cool and I haven’t finished reading it yet but, intuitively, isn’t that roughly equivalent to have a higher learning rate in the beginning? You make the learning algorithm purposefully imprecise at the beginning to explore quickly the loss landscape and later on, once a rough approximation of a minimum has been found, you are able to explore more carefully to look for a deeper minimum or something? Like the dropout introduces noise doesn’t it?

Delacroid t1_jb4c3xt wrote

I don't think so. If you look at the figure and check the angle between whole dataset backprop and minibatch backprop, increasing the learning rate wouldn't change that angle. Only the scale of the vectors.

Also, dropout does not (only) introduce noise, it prevents coadaptation of neurons. In the same way that in random forest each forest is trained on a subset on the data (bootstrapping I think it's called) the same happens for neurons when you use dropout.

I haven't read the paper but my intuition says thattthe merit of dropout for early stages of training could be that the bootstrapping is reducing the bias of the model. That's why the direction of optimization is closer to the whole dataset training.

BrotherAmazing t1_jb36ydw wrote

Not a fan of the title they chose for this paper, as it’s really “Dropout can reduce underfitting” and not that it does in general.

Otherwise it may be interesting if this is re-produced/verified.

Mr_Smartypants t1_jb3tlu5 wrote

> We begin our investigation into dropout training dynamics by making an intriguing observation on gradient norms, which then leads us to a key empirical finding: during the initial stages of training, dropout reduces gradient variance across mini-batches and allows the model to update in more consistent directions. These directions are also more aligned with the entire dataset’s gradient direction (Figure 1).

Interesting. Has anyone looked at optimally controlling the gradient variance with other means? I.e. minibatch size?

alterframe t1_jb6ye5w wrote

Anyone noticed this with weight decay too?

For example here: GIST

It's like larger weight decay provide regularization which lead to slower training as we would expect, but setting lower weight decay makes the training even faster, than the one without any decay at all. I wonder if it may be related.

cztomsik t1_jb995yy wrote

And maybe also related to lr decay?

Also interesting thing is random sampling - at least at the start it seems to help when training causal LMs.

alterframe t1_jb9i70h wrote

Interesting. With many probabilistic approaches, where we have some intermediate variables in a graph like X -> Z -> Y, we need to introduce sampling on Z to prevent mode collapse. Then we also decay the entropy of this sampler with temperature.

This is quite similar to this early dropout idea, because there we also have some sampling process that effectively works only at the beginning of the training. However, in those other scenarios, we rather attribute it to something like exploration vs. exploitation.

If we had an agent that almost immediately assigns very high probability to a bad initial actions, then it may be never able find a proper solution. On a loss landscape in worst case scenario we can also end up in a local minimum very early on, so we use higher lr at the beginning to make it less likely.

Maybe in general random sampling could be safer than using higher lr? High lr can still fail for some models. If, by parallel, we do it just to boost early exploration, then maybe randomness could be a good alternative. That would kind of counter all claims based on analysis of convex functions...

cztomsik t1_jbgexxt wrote

Another interesting idea might be to start training with smaller context len (and bigger batch size - together with random sampling)

If you think about it, people also learn the noun-verb pairs first and then go to sentences and then to longer paragraphs/articles, etc. And it's also good if we have a lot of variance at this early stages.

So it makes some sense, BERT MLM is also very similar to what people do when learning languages :)

alterframe t1_jcn87ue wrote

Do you have any explanation why on Figure 9 the training loss decrease slower for the early dropout? The previous sections are all about how reducing variance in the mini-batch gradients, allows us to travel longer distance in the hyperparameter space (Figure 1 from the post). It seems that it is not reflected in the value of the loss.

Any idea why? It catches up very quickly after the dropout is turned off, but I'm still curious about this behavior.

[deleted] t1_jb0sjkb wrote

[deleted]

[deleted] t1_jb2yygm wrote

[removed]

szidahou t1_jb19y51 wrote

How can authors be confident that this phenomenon is generally true?

Zealousideal_Low1287 t1_jb1ce8q wrote

IDK did you read it?

HillaryPutin t1_jb1ovl4 wrote

Bro sounds like the discussion comments in some of my university courses

TheCockatoo t1_jb1x9k4 wrote

Found reviewer #2. Read the paper!

xXWarMachineRoXx t1_jb1qzsm wrote

-

Whats [R] and [N] in title ?

-

Whats a dropout??

JEFFREY_EPSTElN t1_jb1usu4 wrote

-

Research, News

-

A regularization technique for training neural networks https://en.wikipedia.org/wiki/Dilution_(neural_networks)

WikiSummarizerBot t1_jb1uuaw wrote

>Dilution and dropout (also called DropConnect) are regularization techniques for reducing overfitting in artificial neural networks by preventing complex co-adaptations on training data. They are an efficient way of performing model averaging with neural networks. Dilution refers to thinning weights, while dropout refers to randomly "dropping out", or omitting, units (both hidden and visible) during the training process of a neural network. Both trigger the same type of regularization.

^([ )^(F.A.Q)^( | )^(Opt Out)^( | )^(Opt Out Of Subreddit)^( | )^(GitHub)^( ] Downvote to remove | v1.5)

[deleted] t1_jb1xcfg wrote

[removed]

[deleted] t1_jb1xdiw wrote

[removed]

PassionatePossum t1_jb0xvdo wrote

Thanks. I'm a sucker for this kind of research: Take a simple technique and evaluate it thoroughly, varying one parameter at a time.

It often is not as glamourous as some of the applied stuff. But IMHO these papers are a lot more valuable. With all the applied research papers, all you know in the end that someone had better results. But nobody knows where these improvements actually came from.